A Complete End-to-End Workflow for untargeted LC-MS/MS Metabolomics Data Analysis in R

Philippine Louail, Anna Tagliaferri, Vinicius Verri Hernandes, Daniel M. S. Silva, Johannes Rainer

2023-09-07

Source:vignettes/end-to-end-untargeted-metabolomics.Rmd

end-to-end-untargeted-metabolomics.RmdAbstract

Metabolomics provides a real-time view of the metabolic state of examined samples, with mass spectrometry serving as a key tool in deciphering intricate differences in metabolomes due to specific factors. In the context of metabolomic investigations, untargeted liquid chromatography with tandem mass spectrometry (LC-MS/MS) emerges as a powerful approach thanks to its versatility and resolution. This paper focuses on a dataset aimed at identifying differences in plasma metabolite levels between individuals suffering from a cardiovascular disease and healthy controls.

Despite the potential insights offered by untargeted LC-MS/MS data, a significant challenge in the field lies in the generation of reproducible and scalable analysis workflows. This struggle is due to the aforementioned high versatility of the technique, which results in difficulty in having a one-size-fits-all workflow and software that will adapt to all experimental setups. The power of R-based analysis workflows lies in their high customizability and adaptability to specific instrumental and experimental setups; however, while various specialized packages exist for individual analysis steps, their seamless integration and application to large cohort datasets remain elusive. Addressing this gap, we present an innovative R workflow that leverages xcms, and packages of the RforMassSpectrometry environment to encompass all aspects of pre-processing and downstream analyses for LC-MS/MS datasets in a reproducible manner and allow an easy customization to generate data-set specific workflows. Our workflow seamlessly integrates with Bioconductor packages, offering adaptability to diverse study designs and analysis requirements.

Keyword

LC-MS/MS, reproducibility, workflow, xcms, R, normalization, feature identification, Bioconductor,…

Introduction

Liquid chromatography coupled to tandem mass spectrometry (LC-MS/MS) is a powerful tool for metabolomics investigations, providing a comprehensive view of the metabolome. It enables the identification of a large number of metabolites and their relative abundance in biological samples. Liquid Chromatography (LC) is a separation technique which relies on the different interactions of the analytes towards a chromatographic column - the stationary phase - and the eluent of the analysis - the mobile phase. The stronger the affinity of an analyte to the stationary phase - dictated by polarity, size, charges and other parameters - the longer it will take for the compound to leave the column and be detected by our coupled technique - the Mass Spectrometer.

Mass Spectrometry allows to identify and quantify ions based on their mass-to-charge (m/z) ratio. Its high selectivity relies not only on the capability to separate compounds with very small variations in mass, but also on the capacity to promote fragmentation. An ion with an initial m/z (the parent ion) can be broken into characteristic fragments (daughter ions), which would then help in the structure elucidation and identification of that specific compound (Theodoridis et al. 2012).

Therefore, LC-MS/MS data are usually tridimensional datasets containing the retention time of the compounds after separation by LC, the detected m/z of all the compounds at a given time, and the intensity of each of such signals. Furthermore, each MS signal can have two different levels, corresponding to the signal of the parent ion (called MS1) and the signals from their corresponding fragments (denominanted MS2).

The high sensitivity and specificity of LC-MS/MS make it an indispensable tool for biomarker discovery and elucidating metabolic pathways. The untargeted approach is particularly useful for hypothesis-free investigations, allowing for the detection of unexpected metabolites and pathways. However, the analysis of LC-MS/MS data is complex and requires a series of preprocessing steps to extract meaningful information from the raw data. The main challenges include dealing with the lack of ground truth data, the high dimensionality of the data, and the presence of noise and artifacts (Gika, Wilson, and Theodoridis 2014). Moreover, due to different instrumental setups and protocols the definition of a single one-fits-all workflow is impossible. Finally, while specialized software packages exist for each individual step during an analysis, their seamless integration remains elusive.

Here we present a complete analysis workflow for untargeted LC-MS/MS data using R and Bioconductor packages, in particular those from the RforMassSpectrometry package ecosystem. The later is an initiative initiative that aims to implement an expandable, flexible infrastructure for the analysis of MS data, providing also a comprehensive toolbox of functions to build customized analysis workflows. We demonstrate how the various algorithms can be adapted to the particular data set and how various R packages can be seamlessly integrated to ensure efficient and reproducible processing. The present workflow covers all steps for LC-MS/MS data analysis, from preprocessing, data normalization, differential abundance analysis to the annotation of the significant features i.e., collections of signals that have the same retention time and mass-to-charge ratios pertaining to the same ions. Various options for visualizations as well as quality assessment are presented for all analysis steps.

Data description

In this workflow two datasets are utilized, an LC-MS-based (MS1 level only) untargeted metabolomics data set to quantify small polar metabolites in human plasma samples and an additional LC-MS/MS data set of selected samples from the former study for the identification/annotation of its significant features. The samples used here were randomly selected from a larger study for the identification of metabolites with differences in abundances between individuals suffering from a cardiovascular disease (CVD) and healthy controls (CTR).The subset analyzed here comprises data for three CVD and three CTR as well as four quality control (QC) samples. The QC samples represent a pool of serum samples from a large cohort and were repeatedly measured throughout the experiment to monitor stability of the signal.

The data and metadata for this workflow are accessible on the MetaboLight database under the ID: MTBLS8735.

The detailed materials and method used for the analysis of the samples can also be found on the metabolight database. As it is especially pertinent for the analysis and the chosen parameters, we want to highlight that the samples were analyzed using ultra-high-performance liquid chromatography (UHPLC) coupled to a Q-TOF mass spectrometer (TripleTOF 5600+). The chromatographic separation was based on hydrophilic interaction liquid chromatography (HILIC).

Workflow description

The present workflow describes all steps for the analysis of an LC-MS/MS experiment, which includes the preprocessing of the raw data to generate the abundance matrix for the features in the various samples, followed by data normalization, differential abundance analysis and finally the annotation of features to metabolites. Note that also alternative analysis options and R packages could be used for different steps and some examples are mentioned throughout the workflow. [jo: I’ll include some of these maybe later. It would be key to justify why this workflow is comprehensive]

Our workflow is therefore based on the following dependencies:

## General bioconductor package

library(Biobase)

## Data Import and handling

library(readxl)

library(MsExperiment)

library(MsIO)

library(MsBackendMetaboLights)

library(SummarizedExperiment)

## Preprocessing of LC-MS data

library(xcms)

library(Spectra)

library(MetaboCoreUtils)

## Statistical analysis

library(limma) # Differential abundance

library(matrixStats) # Summaries over matrices

## Visualisation

library(pander)

library(RColorBrewer)

library(pheatmap)

library(vioplot)

library(ggfortify) # Plot PCA

library(gridExtra) # To arrange multiple ggplots into single plots

## Annotation

library(AnnotationHub) # Annotation resources

library(CompoundDb) # Access small compound annotation data.

library(MetaboAnnotation) # Functionality for metabolite annotation.Data import

Note that different equipment will generate various file extensions, so a conversion step might be needed beforehand, though it does not apply to this dataset. The Spectra package supports a variety of ways to store and retrieve MS data, including mzML, mzXML, CDF files, simple flat files, or database systems. If necessary, several tools, such as ProteoWizard’s MSConvert, can be used to convert files to the .mzML format (Chambers et al. 2012).

Below we will show how to extract our dataset from the MetaboLigths

database and load it as an MsExperiment object. For more

information on how to load your data from the MetaboLights database,

refer to the vignette.

For other type of data loading, check out this link:

param <- MetaboLightsParam(mtblsId = "MTBLS8735",

assayName = paste0("a_MTBLS8735_LC-MS_positive_",

"hilic_metabolite_profiling.txt"),

filePattern = ".mzML")

data <- readMsObject(MsExperiment(),

param,

keepOntology = FALSE,

keepProtocol = FALSE,

simplify = TRUE)We next configure the parallel processing setup. Most functions from the xcms package allow per-sample parallel processing, which can improve the performance of the analysis, especially for large data sets. xcms and all packages from the RforMassSpectrometry package ecosystem use the parallel processing setup configured through the BiocParallel Bioconductor package. With the code below we use a fork-based parallel processing on unix system, and a socket-based parallel processing on the Windows operating system.

#' Set up parallel processing using 2 cores

if (.Platform$OS.type == "unix") {

register(MulticoreParam(2))

} else{

register(SnowParam(2))

}Data organisation

The experimental data is now represented by a

MsExperiment object from the MsExperiment

package. The MsExperiment object is a container for

metadata and spectral data that provides and manages also the linkage

between samples and spectra.

data## Object of class MsExperiment

## Spectra: MS1 (17210)

## Experiment data: 10 sample(s)

## Sample data links:

## - spectra: 10 sample(s) to 17210 element(s).Below we provide a brief overview of the data structure and content.

The sampleData() function extracts sample information from

the object. We next extract this data and use the pander

package to render and show this information in Table 1 below. Throughout

the document we use the R pipe operator (|>) to avoid

nested function calls and hence improve code readability.

| Derived_Spectral_Data_File | Characteristics.Sample.type. |

|---|---|

| FILES/MS_QC_POOL_1_POS.mzML | pool |

| FILES/MS_A_POS.mzML | experimental sample |

| FILES/MS_B_POS.mzML | experimental sample |

| FILES/MS_QC_POOL_2_POS.mzML | pool |

| FILES/MS_C_POS.mzML | experimental sample |

| FILES/MS_D_POS.mzML | experimental sample |

| FILES/MS_QC_POOL_3_POS.mzML | pool |

| FILES/MS_E_POS.mzML | experimental sample |

| FILES/MS_F_POS.mzML | experimental sample |

| FILES/MS_QC_POOL_4_POS.mzML | pool |

| Factor.Value.Phenotype. | Sample.Name | Factor.Value.Age. |

|---|---|---|

| POOL | NA | |

| CVD | A | 53 |

| CTR | B | 30 |

| POOL | NA | |

| CTR | C | 66 |

| CVD | D | 36 |

| POOL | NA | |

| CTR | E | 66 |

| CVD | F | 44 |

| POOL | NA |

| derived_spectra_data_file | phenotype | sample_name | age |

|---|---|---|---|

| FILES/MS_QC_POOL_1_POS.mzML | QC | POOL1 | NA |

| FILES/MS_A_POS.mzML | CVD | A | 53 |

| FILES/MS_B_POS.mzML | CTR | B | 30 |

| FILES/MS_QC_POOL_2_POS.mzML | QC | POOL2 | NA |

| FILES/MS_C_POS.mzML | CTR | C | 66 |

| FILES/MS_D_POS.mzML | CVD | D | 36 |

| FILES/MS_QC_POOL_3_POS.mzML | QC | POOL3 | NA |

| FILES/MS_E_POS.mzML | CTR | E | 66 |

| FILES/MS_F_POS.mzML | CVD | F | 44 |

| FILES/MS_QC_POOL_4_POS.mzML | QC | POOL4 | NA |

| injection_index |

|---|

| 1 |

| 2 |

| 3 |

| 4 |

| 5 |

| 6 |

| 7 |

| 8 |

| 9 |

| 10 |

There are 11 samples in this data set. Below are abbreviations essential for proper interpretation of this metadata information:

- injection_index: An index representing the order (position) in which an individual sample was measured (injected) within the LC-MS measurement run of the experiment.

-

phenotype: The sample groups of the experiment:

-

"QC": Quality control sample (pool of serum samples from an external, large cohort). -

"CVD": Sample from an individual with a cardiovascular disease. -

"CTR": Sample from a presumably healthy control.

-

- sample_name: An arbitrary name/identifier of the sample.

- age: The (rounded) age of the individuals.

We will define colors for each of the sample groups based on their sample group using the RColorBrewer package:

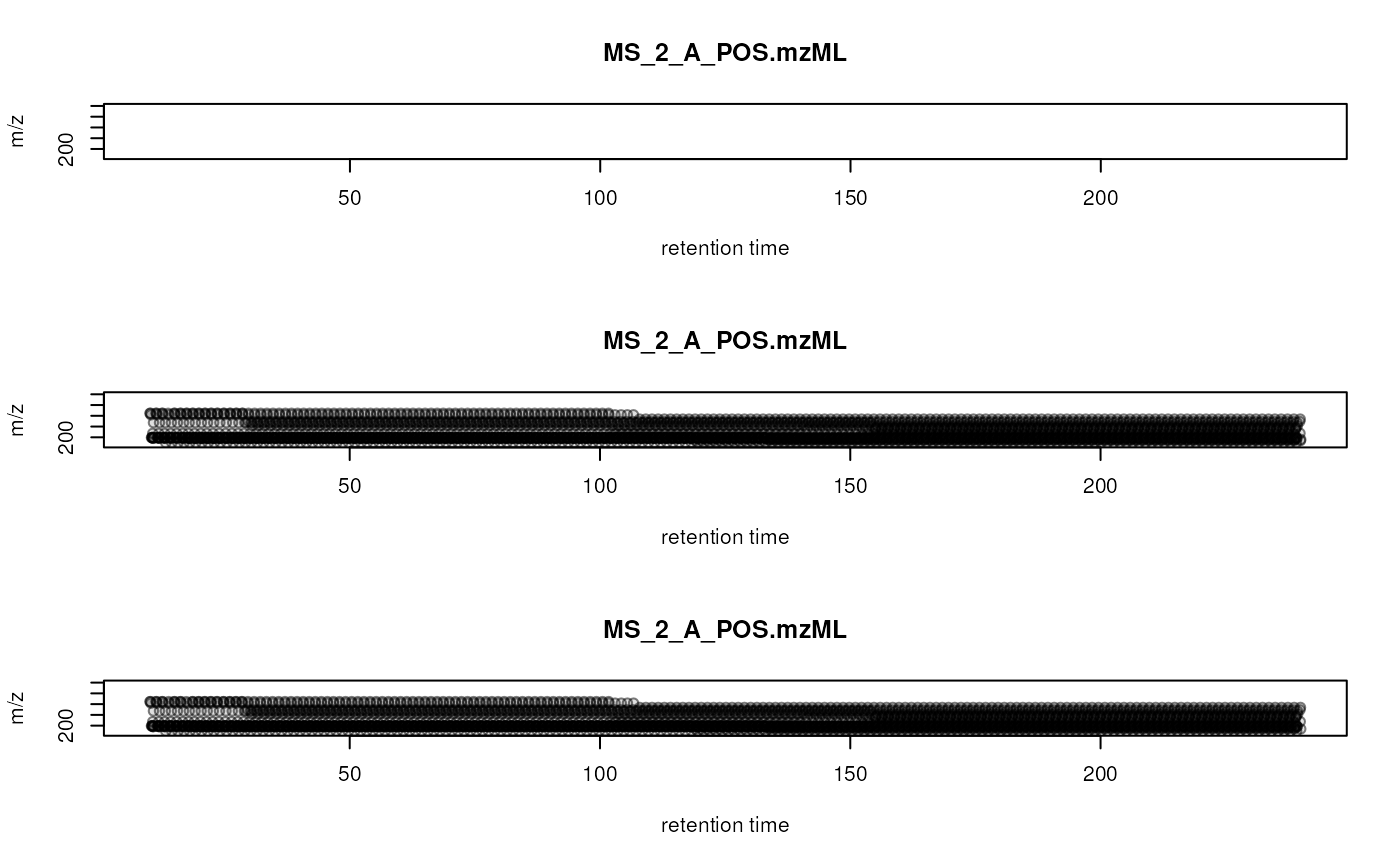

The MS data of this experiment is stored as a Spectra

object (from the Spectra

Bioconductor package) within the MsExperiment object and

can be accessed using spectra() function. Each element in

this object is a spectrum - they are organised linearly and are all

combined in the same Spectra object one after the other

(ordered by retention time and samples).

Below we access the dataset’s Spectra object which will

summarize its available information and provide, among other things, the

total number of spectra of the data set.

#' Access Spectra Object

spectra(data)## MSn data (Spectra) with 17210 spectra in a MsBackendMetaboLights backend:

## msLevel rtime scanIndex

## <integer> <numeric> <integer>

## 1 1 0.274 1

## 2 1 0.553 2

## 3 1 0.832 3

## 4 1 1.111 4

## 5 1 1.390 5

## ... ... ... ...

## 17206 1 479.052 1717

## 17207 1 479.331 1718

## 17208 1 479.610 1719

## 17209 1 479.889 1720

## 17210 1 480.168 1721

## ... 36 more variables/columns.

##

## file(s):

## MS_QC_POOL_1_POS.mzML

## MS_A_POS.mzML

## MS_B_POS.mzML

## ... 7 more filesWe can also summarize the number of spectra and their respective MS

level (extracted with the msLevel() function). The

fromFile() function returns for each spectrum the index of

its sample (data file) and can thus be used to split the information (MS

level in this case) by sample to further summarize using the base R

table() function and combine the result into a matrix. Note

that this is the number of spectra acquired at each run, and not the

number of spectral features in each sample.

#' Count the number of spectra with a specific MS level per file.

spectra(data) |>

msLevel() |>

split(fromFile(data)) |>

lapply(table) |>

do.call(what = cbind)## 1 2 3 4 5 6 7 8 9 10

## 1 1721 1721 1721 1721 1721 1721 1721 1721 1721 1721The present data set thus contains only MS1 data, which is ideal for quantification of the signal. A second (LC-MS/MS) data set also with fragment (MS2) spectra of the same samples will be used later on in the workflow.

Note that users should not restrict themselves to data evaluation examples shown here or in other tutorials. The Spectra package enables user-friendly access to the full MS data and its functionality should be extensively used to explore, visualize and summarize the data.

As another example, we below determine the retention time range for the entire data set.

## [1] 0.273 480.169Data obtained from LC-MS experiments are typically analyzed along the retention time axis, while MS data is organized by spectrum, orthogonal to the retention time axis.

Data visualization and general quality assessment

Effective visualization is paramount for inspecting and assessing the quality of MS data. For a general overview of our LC-MS data, we can:

- Combine all mass peaks from all (MS1) spectra of a sample into a single spectrum in which each mass peak then represents the maximum signal of all mass peaks with a similar m/z. This spectrum might then be called Base Peak Spectrum (BPS), providing information on the most abundant ions of a sample.

- Aggregate mass peak intensities for each spectrum, resulting in the Base Peak Chromatogram (BPC). The BPC shows the highest measured intensity for each distinct retention time (hence spectrum) and is thus orthogonal to the BPS.

- Sum the mass peak intensities for each spectrum to create a Total Ion Chromatogram (TIC).

- Compare the BPS of all samples in an experiment to evaluate similarity of their ion content.

- Compare the BPC of all samples in an experiment to identify samples with similar or dissimilar chromatographic signal.

In addition to such general data evaluation and visualization, it is also crucial to investigate specific signal of e.g. internal standards or compounds/ions known to be present in the samples. By providing a reliable reference, internal standards help achieve consistent and accurate analytical results.

Spectra Data Visualization: BPS

The BPS collapses data in the retention time dimension and reveals the most prevalent ions present in each of the samples, creation of such BPS is however not straightforward. Mass peaks, even if representing signals from the same ion, will never have identical m/z values in consecutive spectra due to the measurement error/resolution of the instrument.

Below we use the combineSpectra function to combine all

spectra from one file (defined using parameter

f = fromFile(data)) into a single spectrum. All mass peaks

with a difference in m/z value smaller than 3 parts-per-million

(ppm) are combined into one mass peak, with an intensity representing

the maximum of all such grouped mass peaks. To reduce memory

requirement, we in addition first bin each spectrum combining

all mass peaks within a spectrum, aggregating mass peaks into bins with

0.01 m/z width. In case of large datasets, it is also

recommended to set the processingChunkSize() parameter of

the MsExperiment object to a finite value (default is

Inf) causing the data to be processed (and loaded into

memory) in chunks of processingChunkSize() spectra. This

can reduce memory demand and speed up the process.

#' Setting the chunksize

chunksize <- 1000

processingChunkSize(spectra(data)) <- chunksizeWe can now generate BPS for each sample and plot()

them.

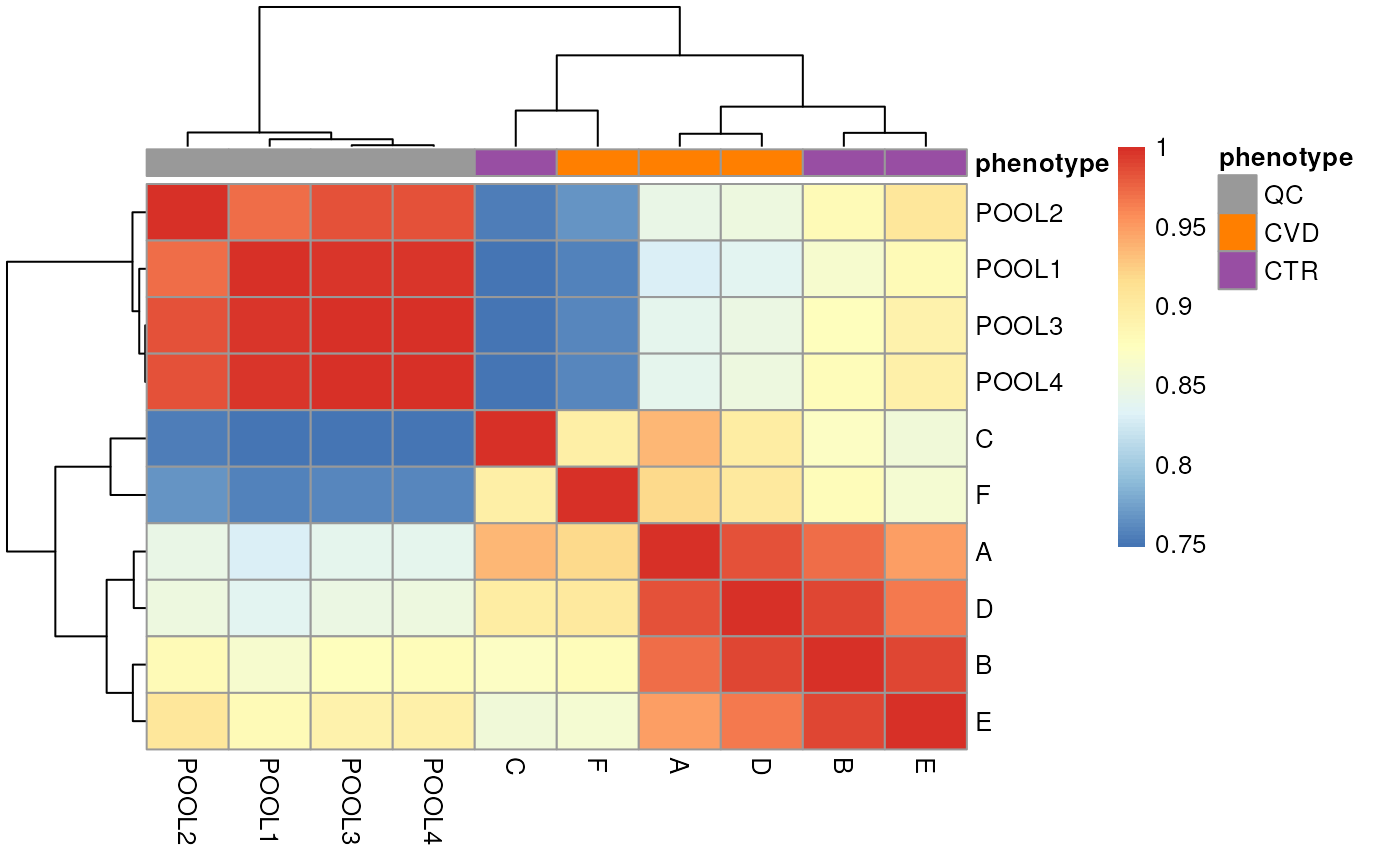

Here, there is observable overlap in ion content between the files, particularly around 300 m/z and 700 m/z. There are however also differences between sets of samples. In particular, BPS 1, 4, 7 and 10 (counting row-wise from left to right) seem different than the others. In fact, these four BPS are from QC samples, and the remaining six from the study samples. The observed differences might be explained by the fact that the QC samples are pools of serum samples from a different cohort, while the study samples represent plasma samples, from a different sample collection.

Next to the visual inspection above, we can also calculate and

express the similarity between the BPS with a heatmap. Below we use the

compareSpectra() function to calculate pairwise

similarities between all BPS and use then the pheatmap()

function from the pheatmap package to cluster and visualize

this result.

We get a first glance at how our different samples distribute in terms of similarity. The heatmap confirms the observations made with the BPS, showing distinct clusters for the QCs and the study samples, owing to the different matrices and sample collections.

It is also strongly recommended to delve deeper into the data by exploring it in more detail. This can be accomplished by carefully assessing our data and extracting spectra or regions of interest for further examination. In the next chunk, we will look at how to extract information for a specific spectrum from distinct samples.

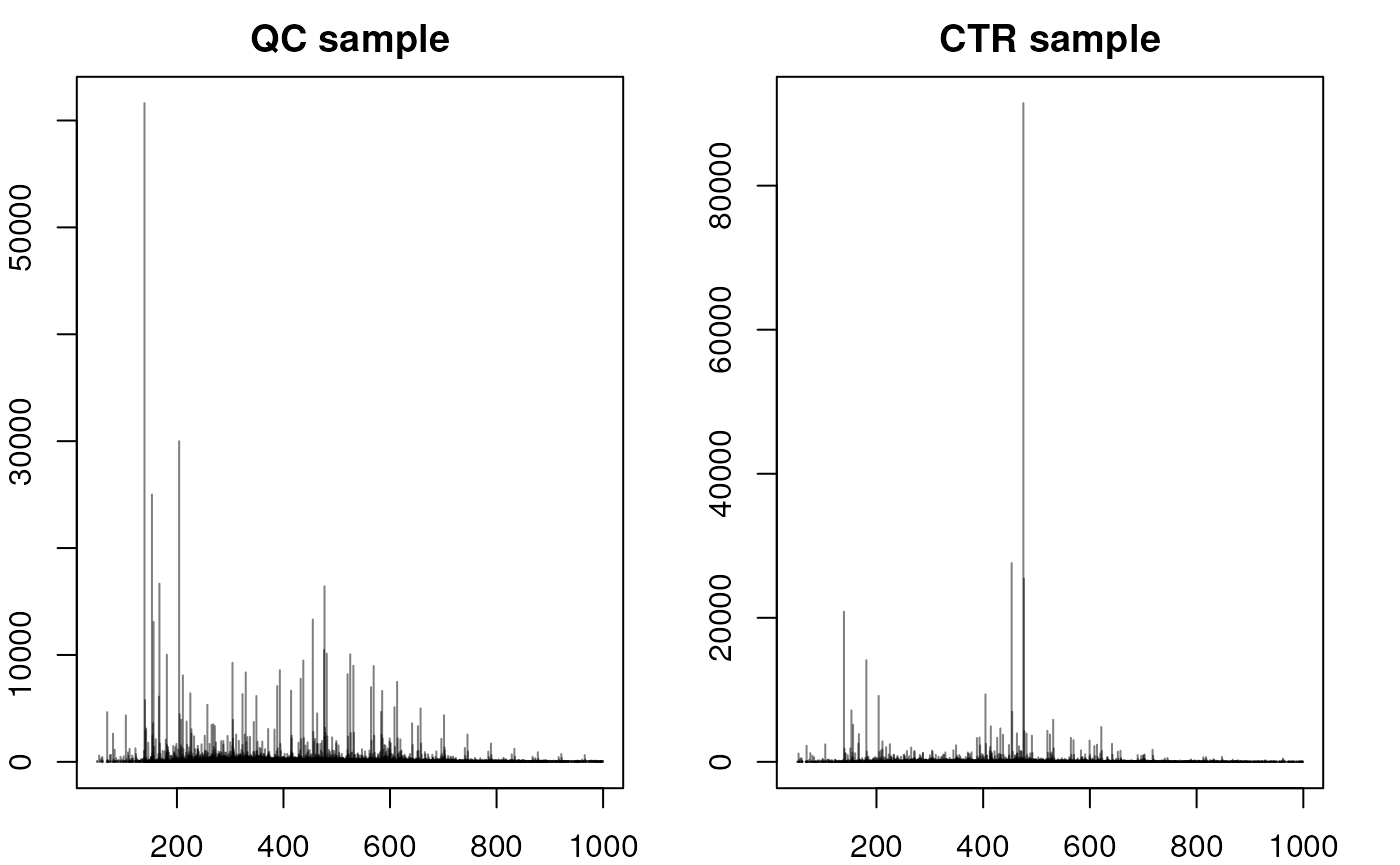

#' Accessing a single spectrum - comparing with QC

par(mfrow = c(1,2), mar = c(2, 2, 2, 2))

spec1 <- spectra(data[1])[125]

spec2 <- spectra(data[3])[125]

plotSpectra(spec1, main = "QC sample")

plotSpectra(spec2, main = "CTR sample")

The significant dissimilarities in peak distribution and intensity confirm the difference in composition between QCs and study samples. We next compare a full MS1 spectrum from a CVD and a CTR sample.

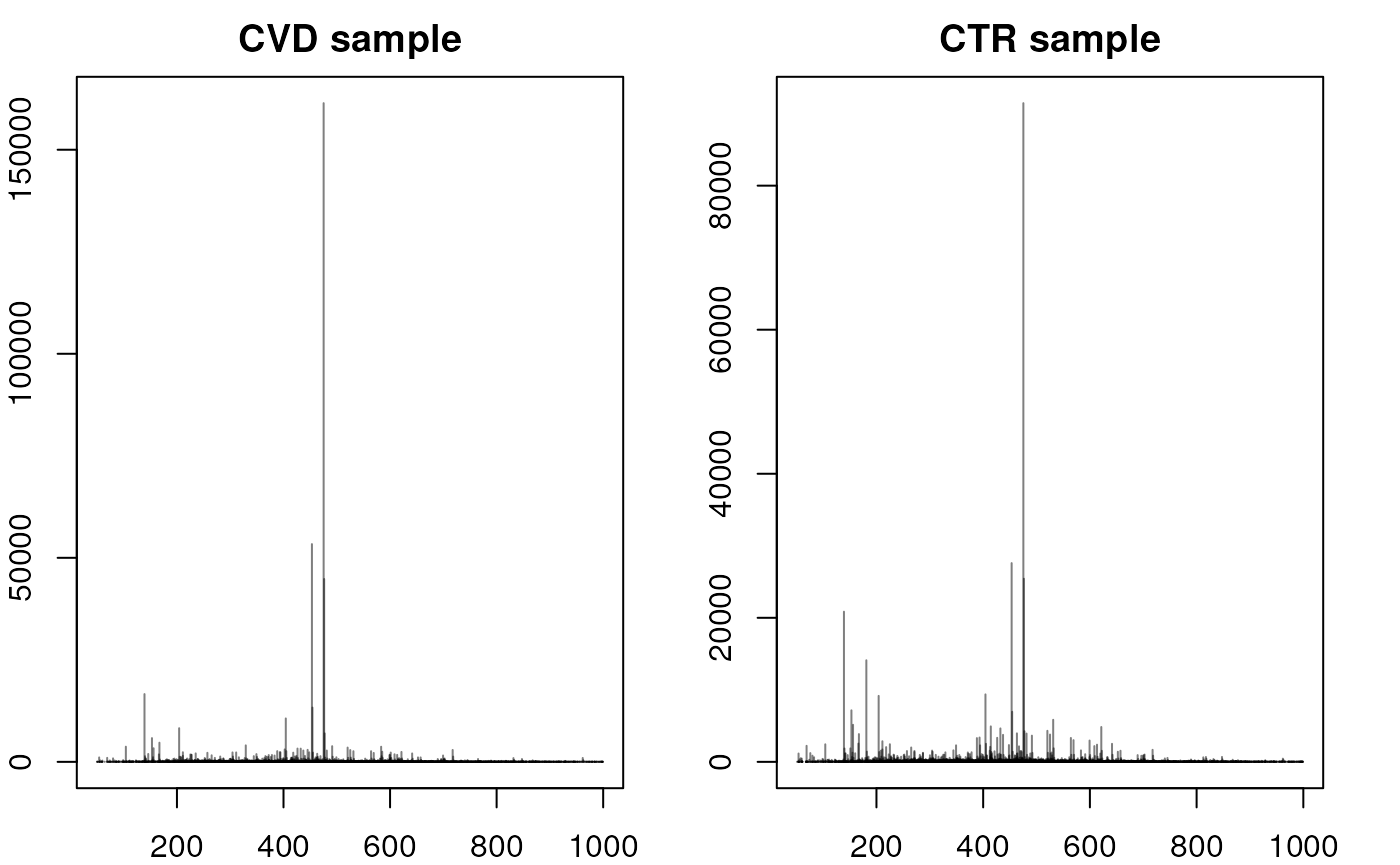

#' Accessing a single spectrum - comparing CVD and CTR

par(mfrow = c(1,2), mar = c(2, 2, 2, 2))

spec1 <- spectra(data[2])[125]

spec2 <- spectra(data[3])[125]

plotSpectra(spec1, main = "CVD sample")

plotSpectra(spec2, main = "CTR sample")

Above, we can observe that the spectra between CVD and CTR samples are not entirely similar, but they do exhibit similar main peaks between 200 and 600 m/z with a general higher intensity in control samples. However the peak distribution (or at least intensity) seems to vary the most between an m/z of 10 to 210 and after an m/z of 600.

The CTR spectrum above exhibits significant peaks around an m/z of 150 - 200 that have a much lower intensity in the CVD sample. To delve into more details about this specific spectrum, a wide range of functions can be employed:

#' Checking its intensity

intensity(spec2)NumericList of length 1 [[1]] 18.3266733266736 45.1666666666667 … 27.1048951048951 34.9020979020979

#' Checking its rtime

rtime(spec2)[1] 34.872

#' Checking its m/z

mz(spec2)NumericList of length 1 [[1]] 51.1677328505635 53.0461968245186 … 999.139446289161 999.315208803072

#' Filtering for a specific m/z range and viewing in a tabular format

filt_spec <- filterMzRange(spec2,c(50,200))

data.frame(intensity = unlist(intensity(filt_spec)),

mz = unlist(mz(filt_spec))) |>

head() |>

pandoc.table(style = "rmarkdown", caption = "Table 2. Intensity and m/z

values of the 125th spectrum of one CTR sample.")| intensity | mz |

|---|---|

| 18.33 | 51.17 |

| 45.17 | 53.05 |

| 23.08 | 53.7 |

| 41.36 | 53.84 |

| 32.87 | 53.88 |

| 1159 | 54.01 |

Chromatographic Data Visualization: BPC and TIC

The chromatogram() function facilitates the extraction

of intensities along the retention time. However, access to

chromatographic information is currently not as efficient and seamless

as it is for spectral information. Work is underway to develop/improve

the infrastructure for chromatographic data through a new

Chromatograms object aimed to be as flexible and

user-friendly as the Spectra object.

For visualizing LC-MS data, a BPC or TIC serves as a valuable tool to

assess the performance of liquid chromatography across various samples

in an experiment. In our case, we extract the BPC from our data to

create such a plot. The BPC captures the maximum peak signal from each

spectrum in a data file and plots this information against the retention

time for that spectrum on the y-axis. The BPC can be extracted using the

chromatogram function.

By setting the parameter aggregationFun = "max", we

instruct the function to report the maximum signal per spectrum.

Conversely, when setting aggregationFun = "sum", it sums up

all intensities of a spectrum, thereby creating a TIC.

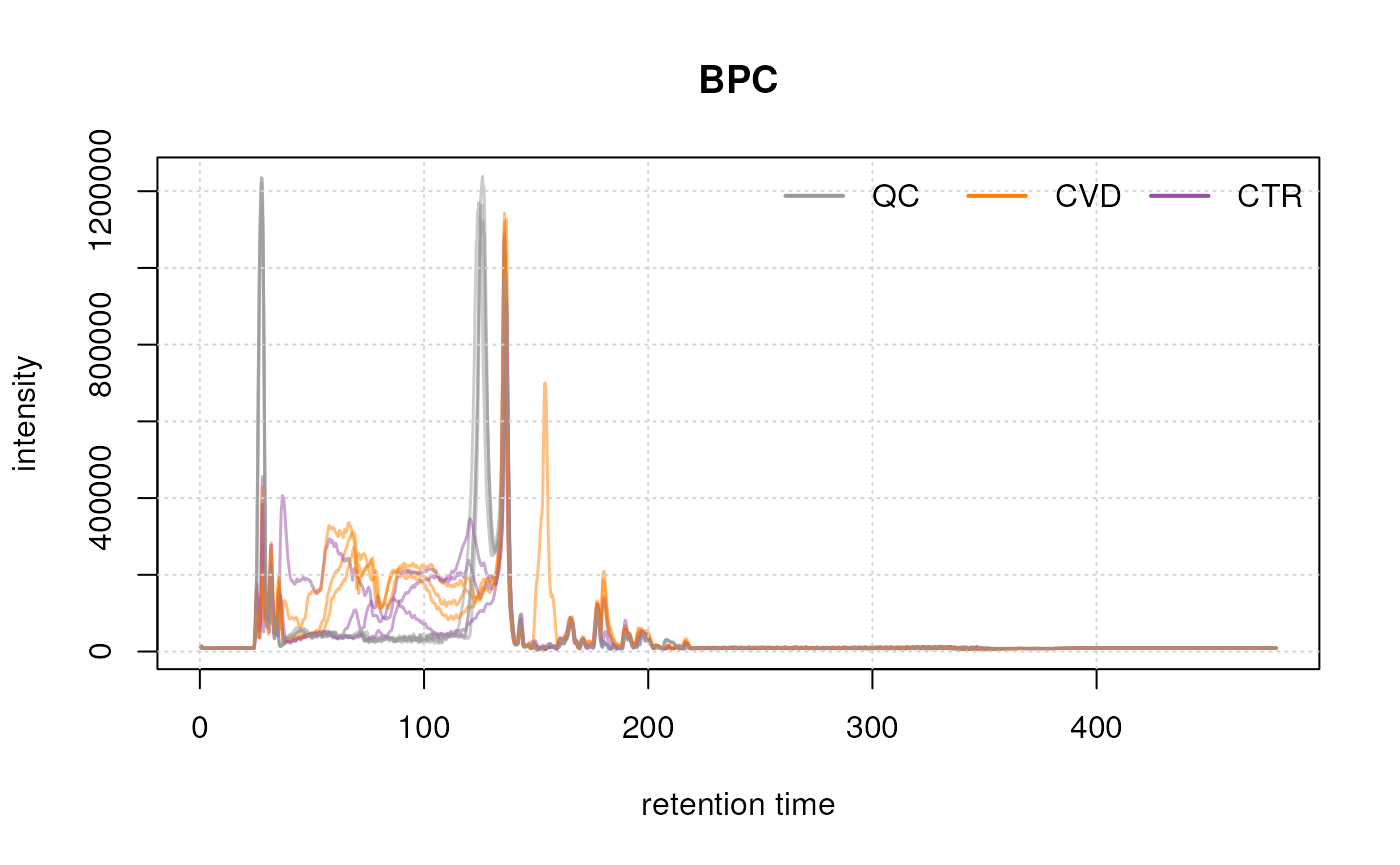

#' Extract and plot BPC for full data

bpc <- chromatogram(data, aggregationFun = "max")

plot(bpc, col = paste0(col_sample, 80), main = "BPC", lwd = 1.5)

grid()

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, lwd = 2, horiz = TRUE, bty = "n")

After about 240 seconds no signal seems to be measured. Thus, we filter the data removing that part as well as the first 10 seconds measured in the LC run.

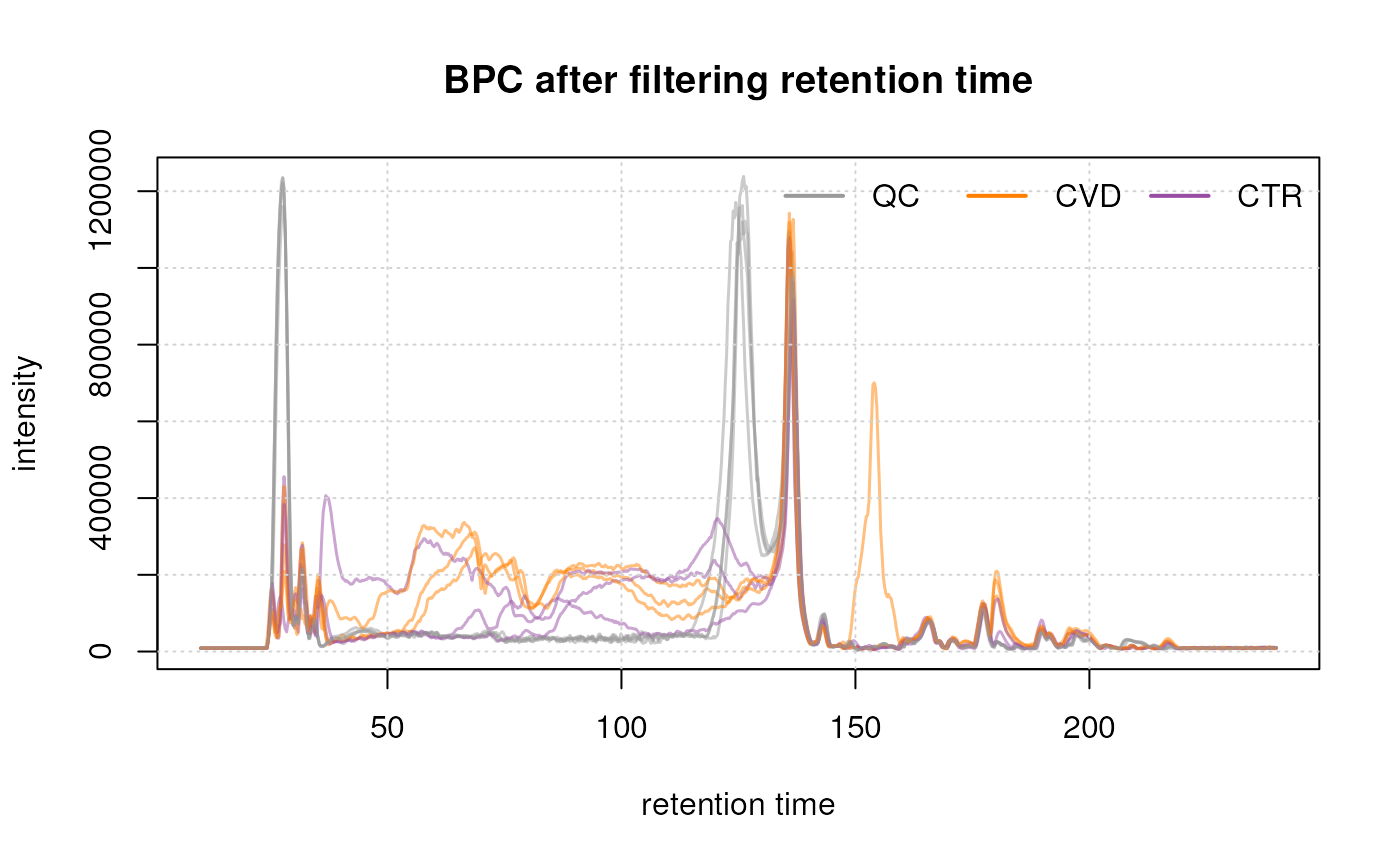

#' Filter the data based on retention time

data <- filterRt(data, c(10, 240))

bpc <- chromatogram(data, aggregationFun = "max")

#' Plot after filtering

plot(bpc, col = paste0(col_sample, 80),

main = "BPC after filtering retention time", lwd = 1.5)

grid()

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, lwd = 2, horiz = TRUE, bty = "n")

Initially, we examined the entire BPC and subsequently filtered it based on the desired retention times. This not only results in a smaller file size but also facilitates a more straightforward interpretation of the BPC.

The final plot illustrates the BPC for each sample colored by phenotype, providing insights on the signal measured along the retention times of each sample. It reveals the points at which compounds eluted from the LC column. In essence, a BPC condenses the three-dimensional LC-MS data (m/z by retention time by intensity) into two dimensions (retention time by intensity).

We can also here compare similarities of the BPCs in a heatmap. The

retention times will however not be identical between different samples.

Thus we bin() the chromatographic signal per sample along the

retention time axis into bins of two seconds resulting in data with the

same number of bins/data points. We can then calculate pairwise

similarities between these data vectors using the cor()

function and visualize the result using pheatmap().

#' Total ion chromatogram

tic <- chromatogram(data, aggregationFun = "sum") |>

bin(binSize = 2)

#' Calculate similarity (Pearson correlation) between BPCs

ticmap <- do.call(cbind, lapply(tic, intensity)) |>

cor()

rownames(ticmap) <- colnames(ticmap) <- sampleData(data)$sample_name

ann <- data.frame(phenotype = sampleData(data)[, "phenotype"])

rownames(ann) <- rownames(ticmap)

#' Plot heatmap

pheatmap(ticmap, annotation_col = ann,

annotation_colors = list(phenotype = col_phenotype))

The heatmap above reinforces what our exploration of spectra data showed, which is a strong separation between the QC and study samples. This is important to bear in mind for later analyses.

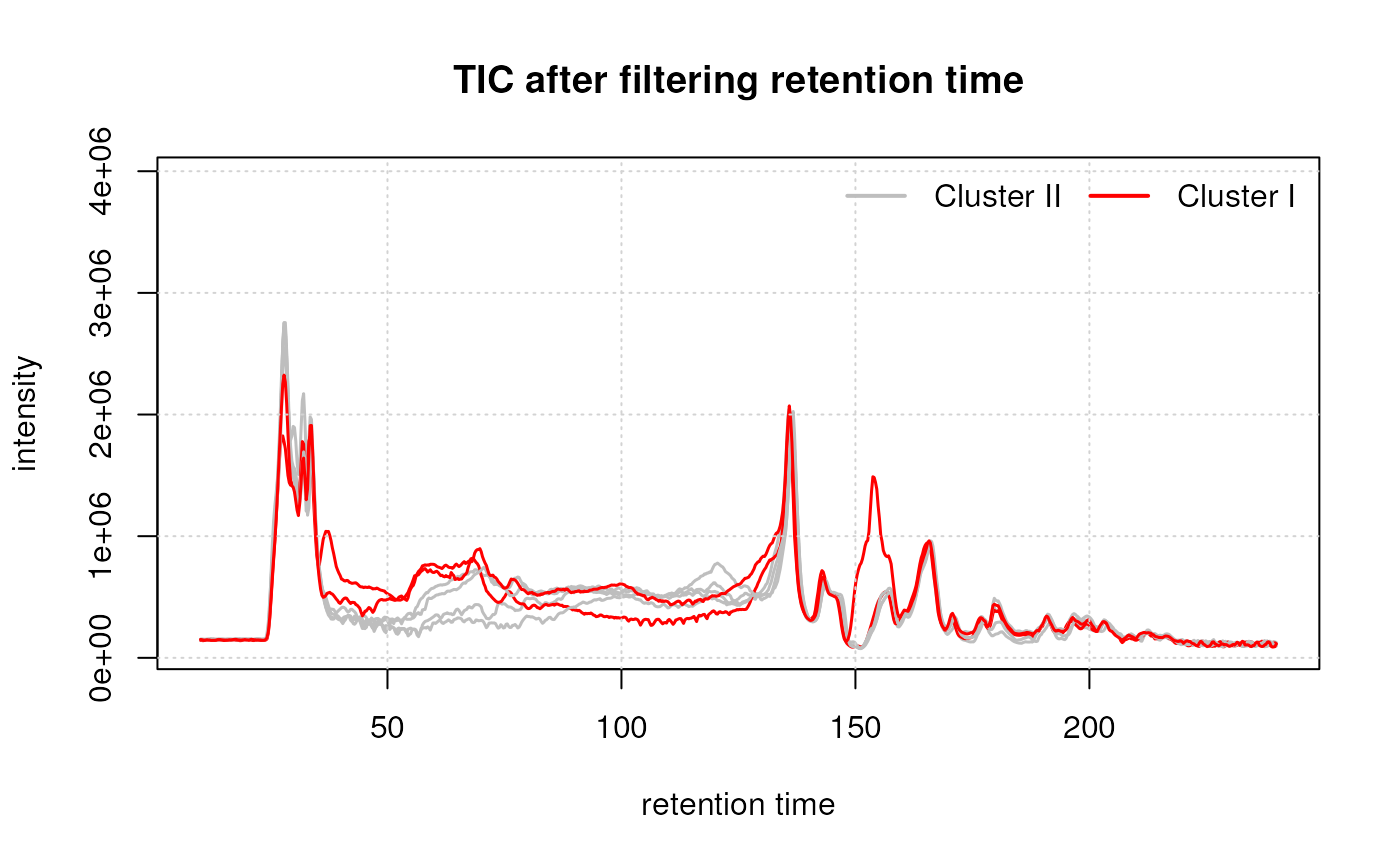

Additionally, study samples group into two clusters, cluster I containing samples C and F and cluster II with all other samples. Below we plot the TIC of all samples, using a different color for each cluster.

cluster_I_idx <- sampleData(data)$sample_name %in% c("F", "C")

cluster_II_idx <- sampleData(data)$sample_name %in% c("A", "B", "D", "E")

temp_col <- c("grey", "red")

names(temp_col) <- c("Cluster II", "Cluster I")

col <- rep(temp_col[1], length(data))

col[cluster_I_idx] <- temp_col[2]

col[sampleData(data)$phenotype == "QC"] <- NA

data |>

chromatogram(aggregationFun = "sum") |>

plot( col = col,

main = "TIC after filtering retention time", lwd = 1.5)

grid()

legend("topright", col = temp_col,

legend = names(temp_col), lty = 1, lwd = 2,

horiz = TRUE, bty = "n")

While the TIC of all samples look similar, the samples from cluster I show a different signal in the retention time range from about 40 to 160 seconds. Whether, and how strong this difference will impact the following analysis remains to be determined.

Known compounds

Throughout the entire process, it is crucial to have reference points within the dataset, such as well-known ions. Most experiments nowadays include internal standards (IS), and it was the case here. We strongly recommend using them for visualization throughout the entire analysis. For this experiment, a set of 15 IS was spiked to all samples. After reviewing signal of all of them, we selected two to guide this analysis process. However, we also advise to plot and evaluate all the ions after each steps.

To illustrate this, we generate Extracted Ion Chromatograms (EIC) for these selected test ions. By restricting the MS data to intensities within a restricted, small m/z range and a selected retention time window, EICs are expected to contain only signal from a single type of ion. The expected m/z and retention times for our set of IS was determined in a different experiment. Additionally, in cases where internal standards are not available, commonly present ions in the sample matrix can serve as suitable alternatives. Ideally, these compounds should be distributed across the entire retention time range of the experiment.

#' Load our list of standard

intern_standard <- read.delim("intern_standard_list.txt")

# Extract EICs for the list

eic_is <- chromatogram(

data,

rt = as.matrix(intern_standard[, c("rtmin", "rtmax")]),

mz = as.matrix(intern_standard[, c("mzmin", "mzmax")]))

#' Add internal standard metadata

fData(eic_is)$mz <- intern_standard$mz

fData(eic_is)$rt <- intern_standard$RT

fData(eic_is)$name <- intern_standard$name

fData(eic_is)$abbreviation <- intern_standard$abbreviation

rownames(fData(eic_is)) <- intern_standard$abbreviation

#' Summary of IS information

cpt <- paste("Table 3.Internal standard list with respective m/z and expected",

"retention time [s].")

fData(eic_is)[, c("name", "mz", "rt")] |>

as.data.frame() |>

pandoc.table(style = "rmarkdown", caption = cpt)| name | mz | |

|---|---|---|

| carnitine_d3 | Carnitine (D3) | 165.1 |

| creatinine_methyl_d3 | Creatinine (N-Methhyl_D3) | 117.1 |

| glucose_d2 | Glucose (6,6-D2) | 205.1 |

| alanine_13C_15N | L-Alanine (13C3, 99%; 15N, 99%) | 94.06 |

| arginine_13C_15N | L-Arginine HCl (13C6, 99%; 15N4, 99%) | 185.1 |

| aspartic_13C_15N | L-Aspartic acid (13C4, 99%; 15N, 99%) | 139.1 |

| cystine_13C_15N | L-Cystine (13C6, 99%; 15N2, 99%) | 249 |

| glutamic_13C_15N | L-Glutamic acid (13C5, 99%; 15N, 99%) | 154.1 |

| glycine_13C_15N | Glycine (13C2, 99%; 15N, 99%) | 79.04 |

| histidine_13C_15N | L-Histidine HCl H2O (13C6; 15N3, 99%) | 165.1 |

| isoleucine_13C_15N | L-Isoleucine (13C6, 99%; 15N, 99%) | 139.1 |

| leucine_13C_15N | L-Leucine (13C6, 99%; 15N, 99%) | 139.1 |

| lysine_13C_15N | L-Lysine 2HCl (13C6, 99%; 15N2, 99%) | 155.1 |

| methionine_13C_15N | L-Methionine (13C5, 99%; 15N, 99%) | 156.1 |

| phenylalanine_13C_15N | L-Phenylalanine (13C9, 99%; 15N, 99%) | 176.1 |

| proline_13C_15N | L-Proline (13C5, 99%; 15N, 99%) | 122.1 |

| serine_13C_15N | L-Serine (13C3, 99%; 15N, 99%) | 110.1 |

| threonine_13C_15N | L-Threonine (13C4, 99%; 15N, 99%) | 125.1 |

| tyrosine_13C_15N | L-Tyrosine (13C9, 99%; 15N, 99%) | 192.1 |

| valine_13C_15N | L-Valine (13C5, 99%; 15N, 99%) | 124.1 |

| rt | |

|---|---|

| carnitine_d3 | 61 |

| creatinine_methyl_d3 | 126 |

| glucose_d2 | 166 |

| alanine_13C_15N | 167 |

| arginine_13C_15N | 183 |

| aspartic_13C_15N | 179 |

| cystine_13C_15N | 209 |

| glutamic_13C_15N | 171 |

| glycine_13C_15N | 168 |

| histidine_13C_15N | 185 |

| isoleucine_13C_15N | 154 |

| leucine_13C_15N | 151 |

| lysine_13C_15N | 184 |

| methionine_13C_15N | 161 |

| phenylalanine_13C_15N | 153 |

| proline_13C_15N | 167 |

| serine_13C_15N | 178 |

| threonine_13C_15N | 171 |

| tyrosine_13C_15N | 166 |

| valine_13C_15N | 164 |

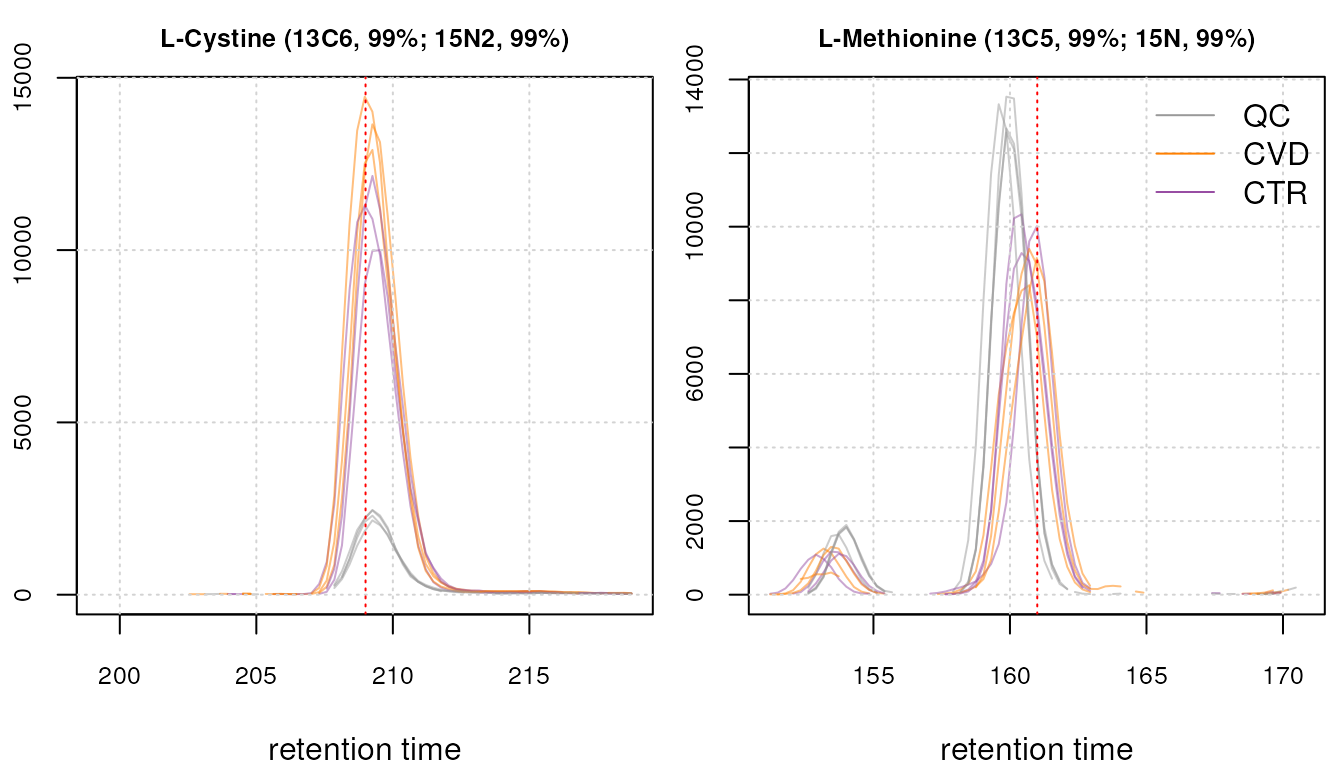

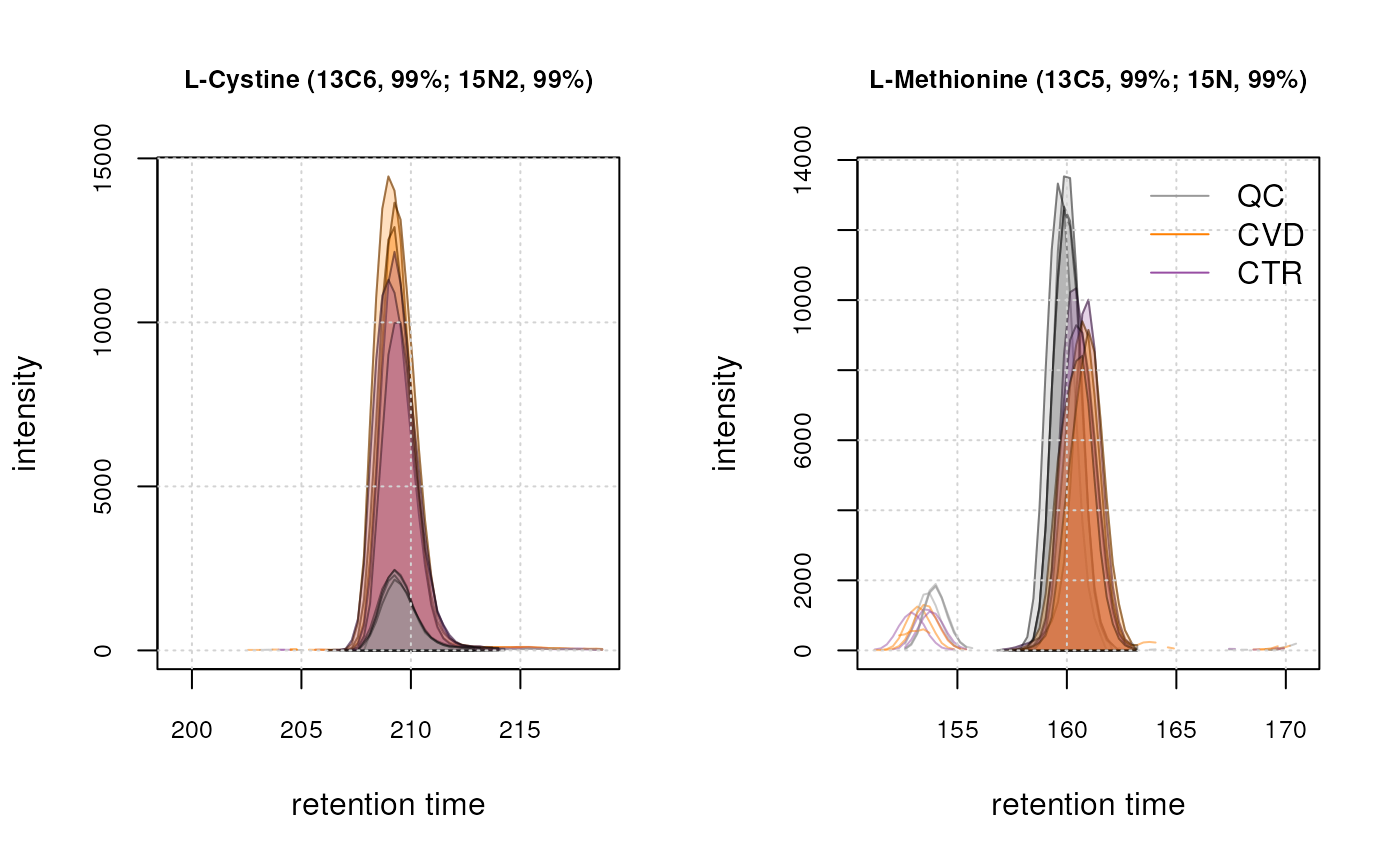

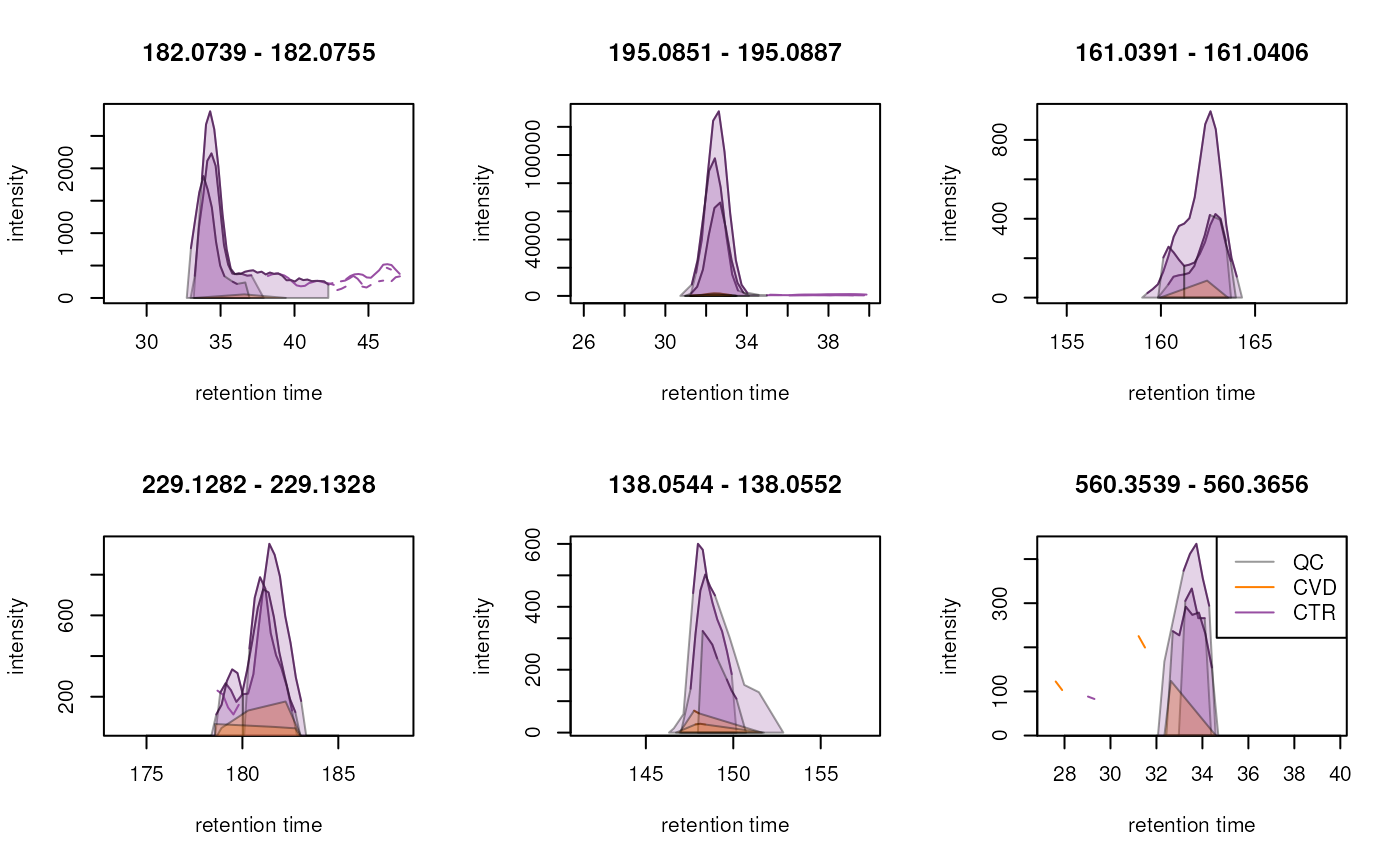

We below plot the EICs for isotope labeled cystine and methionine.

#' Extract the two IS from the chromatogram object.

eic_cystine <- eic_is["cystine_13C_15N"]

eic_met <- eic_is["methionine_13C_15N"]

#' plot both EIC

par(mfrow = c(1, 2), mar = c(4, 2, 2, 0.5))

plot(eic_cystine, main = fData(eic_cystine)$name, cex.axis = 0.8,

cex.main = 0.8,

col = paste0(col_sample, 80))

grid()

abline(v = fData(eic_cystine)$rt, col = "red", lty = 3)

plot(eic_met, main = fData(eic_met)$name, cex.axis = 0.8, cex.main = 0.8,

col = paste0(col_sample, 80))

grid()

abline(v = fData(eic_met)$rt, col = "red", lty = 3)

legend("topright", col = col_phenotype, legend = names(col_phenotype), lty = 1,

bty = "n")

We can observe a clear concentration difference between QCs and study samples for the isotope labeled cystine ion. Meanwhile, the labeled methionine internal standard exhibits a discernible signal amidst some noise and a noticeable retention time shift between samples.

While the artificially isotope labeled compounds were spiked to the

individual samples, there should also be the signal from the endogenous

compounds in serum (or plasma) samples. Thus, we calculate next the mass

and m/z of an [M+H]+ ion of the endogenous cystine

from its chemical formula and extract also the EIC from this ion. For

calculation of the exact mass and the m/z of the selected ion

adduct we use the calculateMass() and

mass2mz() functions from the

r Biocpkg("MetaboCoreUtils") package.

#' extract endogenous cystine mass and EIC and plot.

cysmass <- calculateMass("C6H12N2O4S2")

cys_endo <- mass2mz(cysmass, adduct = "[M+H]+")[, 1]

#' Plot versus spiked

par(mfrow = c(1, 2))

chromatogram(data, mz = cys_endo + c(-0.005, 0.005),

rt = unlist(fData(eic_cystine)[, c("rtmin", "rtmax")]),

aggregationFun = "max") |>

plot(col = paste0(col_sample, 80)) |>

grid()

plot(eic_cystine, col = paste0(col_sample, 80))

grid()

legend("topright", col = col_phenotype, legend = names(col_phenotype), lty = 1,

bty = "n")

The two cystine EICs above look highly similar (the endogenous shown left, the isotope labeled right in the plot above), if not for the shift in m/z, which arises from the artificial labeling. This shift allows us to discriminate between the endogenous and non-endogenous compound.

Data preprocessing

Preprocessing stands as the inaugural step in the analysis of untargeted LC-MS. It is characterized by 3 main stages: chromatographic peak detection, retention time shift correction (alignment) and correspondence which results in features defined. The primary objective of preprocessing is the quantification of signals from ions measured in a sample, addressing any potential retention time drifts between samples, and ensuring alignment of quantified signals across samples within an experiment. The final result of LC-MS data preprocessing is a numeric matrix with abundances of quantified entities in the samples of the experiment.

[anna: silly question: isn’t the goal of preprocessing to align and group all signals pertaining to a certain ion in a feature? And then obtain the matrix with the abundances][phili: I actually really like anna’s simple definition. what do you think ?]

Chromatographic peak detection

The initial preprocessing step involves detecting intensity peaks

along the retention time axis, the so called chromatographic

peaks. To achieve this, we employ the findChromPeaks()

function within xcms. This function supports various algorithms

for peak detection, which can be selected and configured with their

respective parameter objects.

The preferred algorithm in this case, CentWave, utilizes continuous wavelet transformation (CWT)-based peak detection (Tautenhahn, Böttcher, and Neumann 2008). This method is known for its effectiveness in handling non-Gaussian shaped chromatographic peaks or peaks with varying retention time widths, which are commonly encountered in HILIC separations.

Below we apply the CentWave algorithm with its default settings on the extracted ion chromatogram for cystine and methionine ions and evaluate its results.

#' Use default Centwave parameter

param <- CentWaveParam()

#' Look at the default parameters

param## Object of class: CentWaveParam

## Parameters:

## - ppm: [1] 25

## - peakwidth: [1] 20 50

## - snthresh: [1] 10

## - prefilter: [1] 3 100

## - mzCenterFun: [1] "wMean"

## - integrate: [1] 1

## - mzdiff: [1] -0.001

## - fitgauss: [1] FALSE

## - noise: [1] 0

## - verboseColumns: [1] FALSE

## - roiList: list()

## - firstBaselineCheck: [1] TRUE

## - roiScales: numeric(0)

## - extendLengthMSW: [1] FALSE

## - verboseBetaColumns: [1] FALSE

#' Evaluate for Cystine

cystine_test <- findChromPeaks(eic_cystine, param = param)

chromPeaks(cystine_test)## rt rtmin rtmax into intb maxo sn row column

#' Evaluate for Methionine

met_test <- findChromPeaks(eic_met, param = param)

chromPeaks(met_test)## rt rtmin rtmax into intb maxo sn row columnWhile CentWave is a highly performant algorithm, it requires to be costumized to each dataset. This implies that the parameters should be fine-tuned based on the user’s data. The example above serves as a clear motivation for users to familiarize themselves with the various parameters and the need to adapt them to a data set. We will discuss the main parameters that can be easily adjusted to suit the user’s dataset:

peakwidth: Specifies the minimal and maximal expected width of the peaks in the retention time dimension. Highly dependent on the chromatographic settings used.ppm: The maximal allowed difference of mass peaks’ m/z values (in parts-per-million) in consecutive scans to consider them representing signal from the same ion.integrate: This parameter defines the integration method. Here, we primarily useintegrate = 2because it integrates also signal of a chromatographic peak’s tail and is considered more accurate by the developers.

To determine peakwidth, we recommend that users refer to

previous EICs and estimate the range of peak widths they observe in

their dataset. Ideally, examining multiple EICs should be the goal. For

this dataset, the peak widths appear to be around 2 to 10 seconds. We do

not advise choosing a range that is too wide or too narrow with the

peakwidth parameter as it can lead to false positives or

negatives.

To determine the ppm, a deeper analysis of the dataset

is needed. It is clarified above that ppm depends on the

instrument, but users should not necessarily input the vendor-advertised

ppm. Here’s how to determine it as accurately as possible:

The following steps involve generating a highly restricted MS area with a single mass peak per spectrum, representing the cystine ion. The m/z of these peaks is then extracted, their absolute difference calculated and finally expressed in ppm.

#' Restrict the data to signal from cystine in the first sample

cst <- data[1L] |>

spectra() |>

filterRt(rt = c(208, 218)) |>

filterMzRange(mz = fData(eic_cystine)["cystine_13C_15N", c("mzmin", "mzmax")])

#' Show the number of peaks per m/z filtered spectra

lengths(cst)## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1

#' Calculate the difference in m/z values between scans

mz_diff <- cst |>

mz() |>

unlist() |>

diff() |>

abs()

#' Express differences in ppm

range(mz_diff * 1e6 / mean(unlist(mz(cst))))## [1] 0.08829605 14.82188728We therefore, choose a value close to the maximum within this range

for parameter ppm, i.e., 15 ppm.

We can now perform the chromatographic peak detection with the

adapted settings on our EICs. It is important to note that, to properly

estimate background noise, sufficient data points outside the

chromatographic peak need to be present. This is generally no problem if

peak detection is performed on the full LC-MS data set, but for peak

detection on EICs the retention time range of the EIC needs to be

sufficiently wide. If the function fails to find a peak in an EIC, the

initial troubleshooting step should be to increase this range.

Additionally, the signal-to-noise threshold snthresh should

be reduced for peak detection in EICs, because within the small

retention time range, not enough signal is present to properly estimate

the background noise. Finally, in case of too few MS1 data points per

peaks, setting CentWave’s advanced parameter

extendLengthMSW to TRUE can help with peak

detection.

#' Parameters adapted for chromatographic peak detection on EICs.

param <- CentWaveParam(peakwidth = c(1, 8), ppm = 15, integrate = 2,

snthresh = 2)

#' Evaluate on the cystine ion

cystine_test <- findChromPeaks(eic_cystine, param = param)

chromPeaks(cystine_test)## rt rtmin rtmax into intb maxo sn row column

## [1,] 209.251 207.577 212.878 4085.675 2911.376 2157.459 4 1 1

## [2,] 209.251 206.182 213.995 24625.728 19074.407 12907.487 4 1 2

## [3,] 209.252 207.020 214.274 19467.836 14594.041 9996.466 4 1 3

## [4,] 209.251 207.577 212.041 4648.229 3202.617 2458.485 3 1 4

## [5,] 208.974 206.184 213.159 23801.825 18126.978 11300.289 3 1 5

## [6,] 209.250 207.018 213.714 25990.327 21036.768 13650.329 5 1 6

## [7,] 209.252 207.857 212.879 4528.767 3259.039 2445.841 4 1 7

## [8,] 209.252 207.299 213.995 23119.449 17274.140 12153.410 4 1 8

## [9,] 208.972 206.740 212.878 28943.188 23436.119 14451.023 4 1 9

## [10,] 209.252 207.578 213.437 4470.552 3065.402 2292.881 4 1 10

#' Evaluate on the methionine ion

met_test <- findChromPeaks(eic_met, param = param)

chromPeaks(met_test)## rt rtmin rtmax into intb maxo sn row column

## [1,] 159.867 157.913 162.378 20026.61 14715.42 12555.601 4 1 1

## [2,] 160.425 157.077 163.215 16827.76 11843.39 8407.699 3 1 2

## [3,] 160.425 157.356 163.215 18262.45 12881.67 9283.375 3 1 3

## [4,] 159.588 157.635 161.820 20987.72 15424.25 13327.811 4 1 4

## [5,] 160.985 156.799 163.217 16601.72 11968.46 10012.396 4 1 5

## [6,] 160.982 157.634 163.214 17243.24 12389.94 9150.079 4 1 6

## [7,] 159.867 158.193 162.099 21120.10 16202.05 13531.844 3 1 7

## [8,] 160.426 157.356 162.937 18937.40 13739.73 10336.000 3 1 8

## [9,] 160.704 158.472 163.215 17882.21 12299.43 9395.548 3 1 9

## [10,] 160.146 157.914 162.379 20275.80 14279.50 12669.821 3 1 10With the customized parameters, a chromatographic peak was detected

in each sample. Below, we use the plot() function on the

EICs to visualize these results.

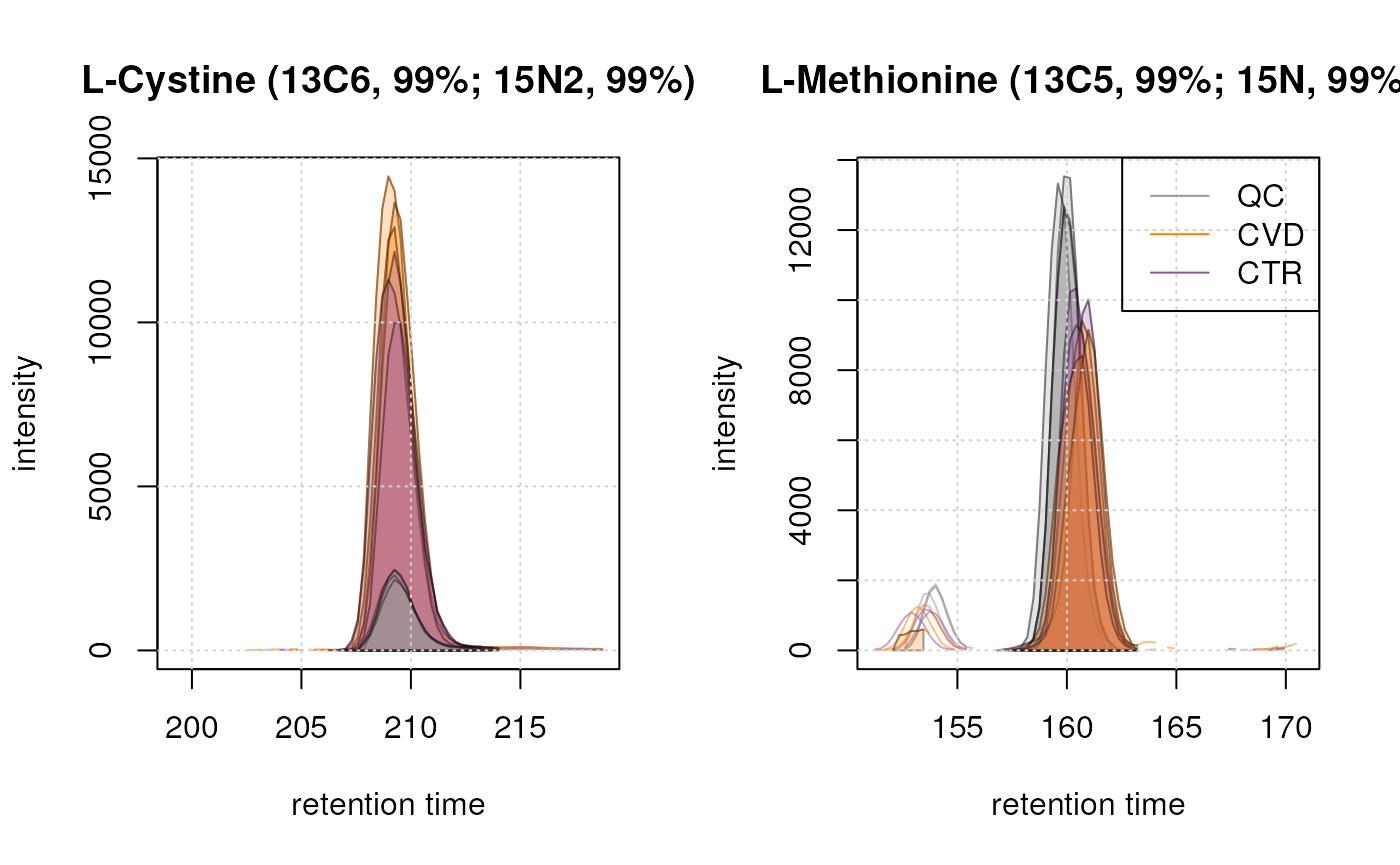

We can see a peak seems ot be detected in each sample for both ions. This indicates that our custom settings seem thus to be suitable for our dataset. We now proceed and apply them to the entire dataset, extracting EICs again for the same ions to evaluate and confirm that chromatographic peak detection worked as expected. Note:

We revert the value for parameter

snthreshto its default, because, as mentioned above, background noise estimation is more reliable when performed on the full data set.Parameter

chunkSizeoffindChromPeaks()defines the number of data files that are loaded into memory and processed simultaneously. This parameter thus allows to fine-tune the memory demand as well as performance of the chromatographic peak detection step.

#' Using the same settings, but with default snthresh

param <- CentWaveParam(peakwidth = c(1, 8), ppm = 15, integrate = 2)

data <- findChromPeaks(data, param = param, chunkSize = 5)

#' Update EIC internal standard object

eics_is_noprocess <- eic_is

eic_is <- chromatogram(data,

rt = as.matrix(intern_standard[, c("rtmin", "rtmax")]),

mz = as.matrix(intern_standard[, c("mzmin", "mzmax")]))

fData(eic_is) <- fData(eics_is_noprocess)Below we plot the EICs of the two selected internal standards to evaluate the chromatographic peak detection results.

Peaks seem to have been detected properly in all samples for both ions. This indicates that the peak detection process over the entire dataset was successful.

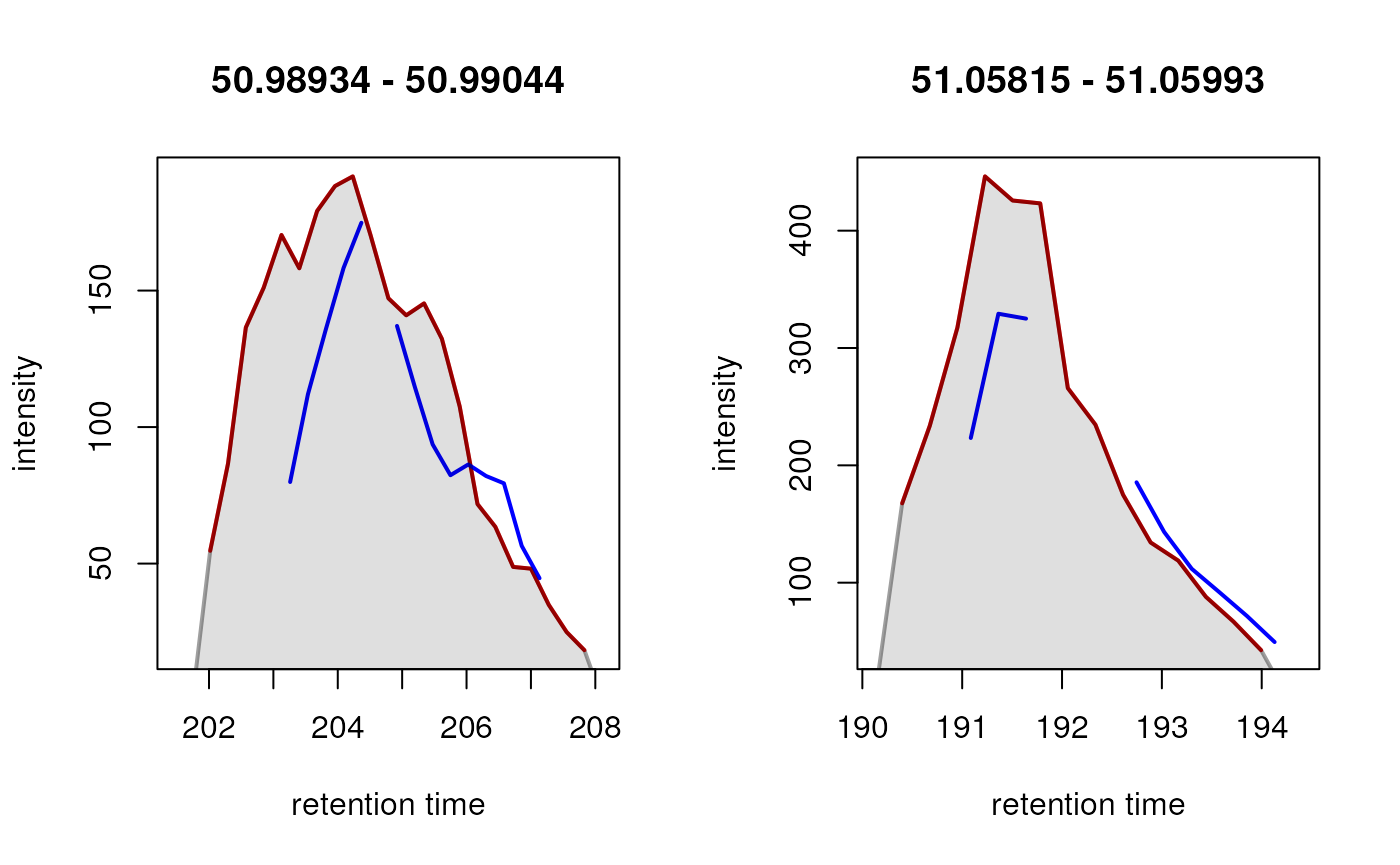

Refine identified chromatographic peaks

The identification of chromatographic peaks using the

CentWave algorithm can sometimes result in artifacts, such as

overlapping or split peaks. To address this issue, the

refineChromPeaks() function is utilized, in conjunction

with MergeNeighboringPeaksParam, which aims at merging such

split peaks.

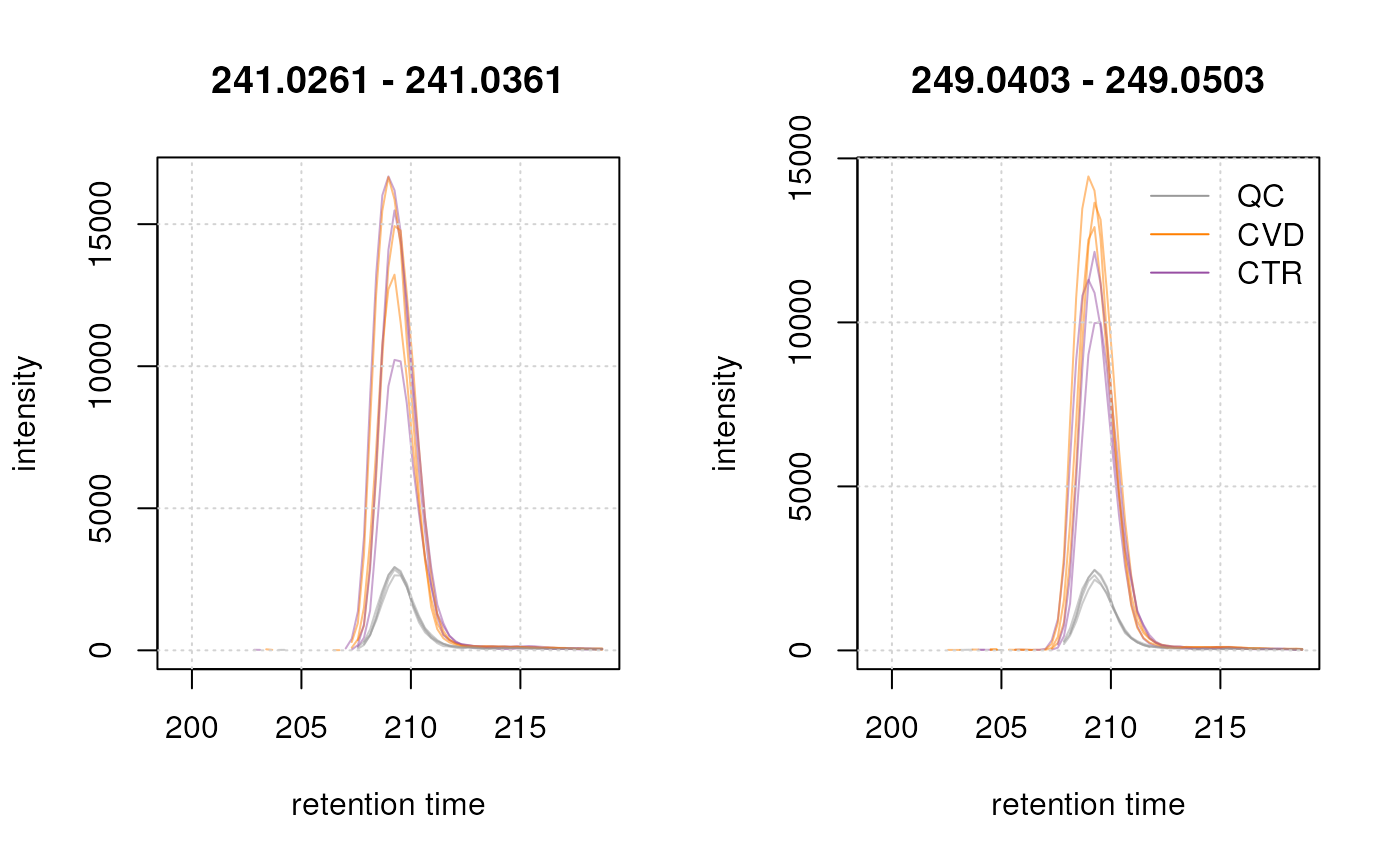

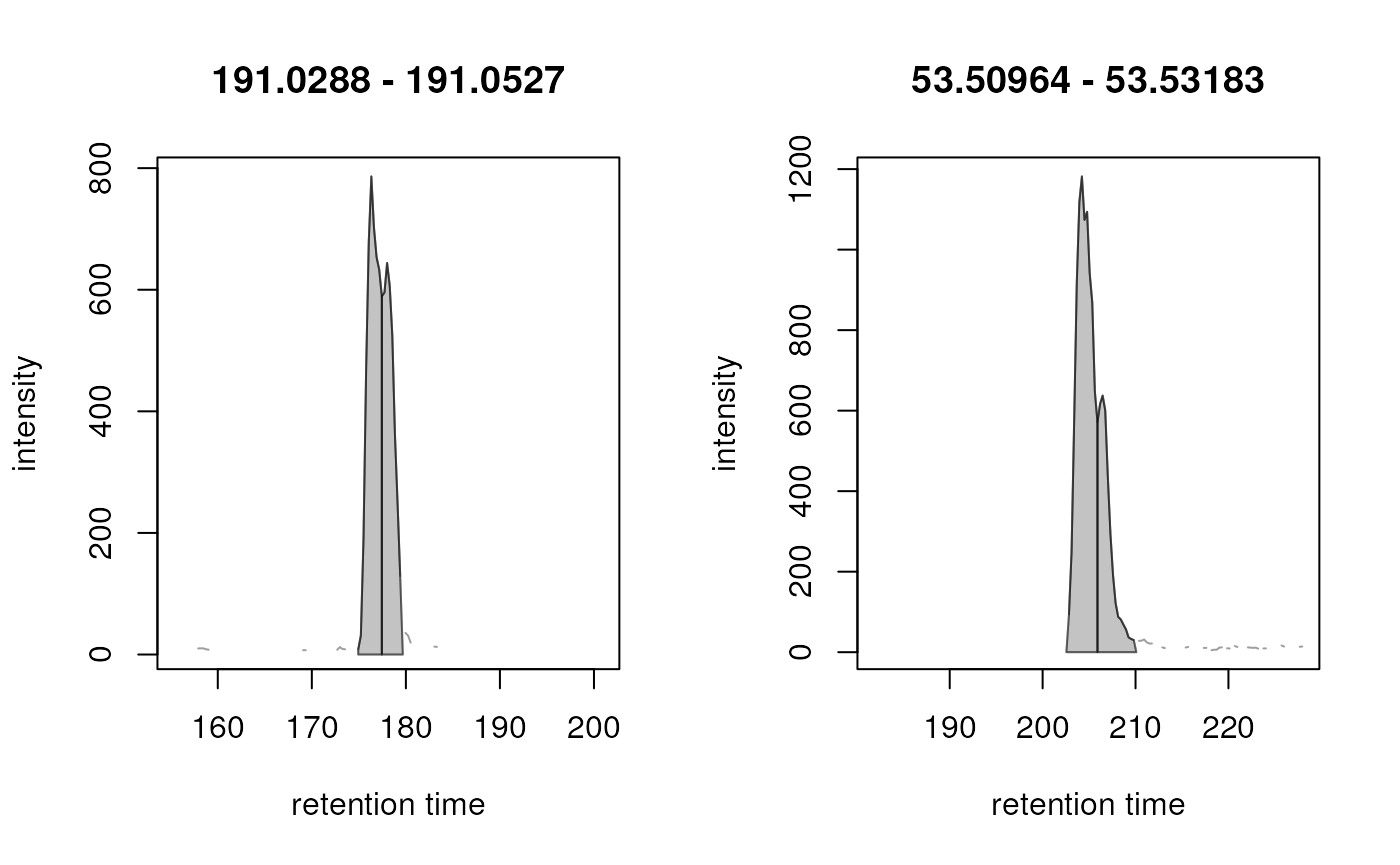

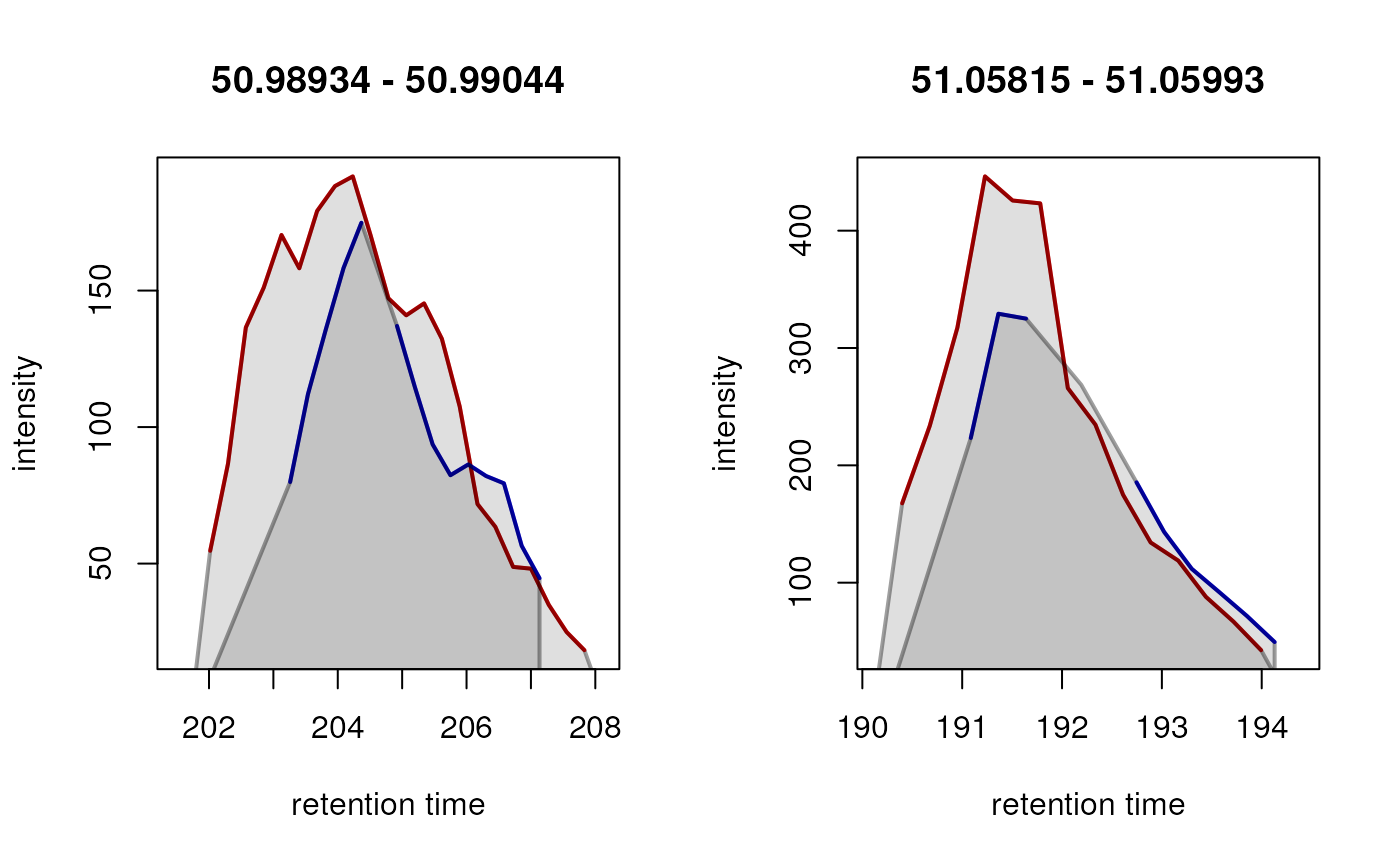

Below we show some examples of CentWave peak detection artifacts. These examples are pre-selected to illustrate the necessity of the next step:

In both cases the signal presumably from a single type of ion was

split into two separate chromatographic peaks (indicated by the vertical

line). The MergeNeigboringPeaksParam allows to combine such

split peaks. The parameters for this algorithm are defined below:

-

expandMzandexpandRt: Define which chromatographic peaks should be evaluated for merging.-

expandMz: Suggested to be kept relatively small (here at 0.0015) to prevent the merging of isotopes. -

expandRt: Usually set to approximately half the size of the average retention time width used for chromatographic peak detection (in this case, 2.5 seconds).

-

-

minProp: Used to determine whether candidates will be merged. Chromatographic peaks with overlapping m/z ranges (expanded on each side byexpandMz) and with a tail-to-head distance in the retention time dimension that is less than2 * expandRt, and for which the signal between them is higher thanminPropof the apex intensity of the chromatographic peak with the lower intensity, are merged. Values for this parameter should not be too small to avoid merging closely co-eluting ions, such as isomers.

We test these settings below on the EICs with the split peaks.

#' set up the parameter

param <- MergeNeighboringPeaksParam(expandRt = 2.5, expandMz = 0.0015,

minProp = 0.75)

#' Perform the peak refinement on the EICs

eics <- refineChromPeaks(eics, param = param)

plot(eics)

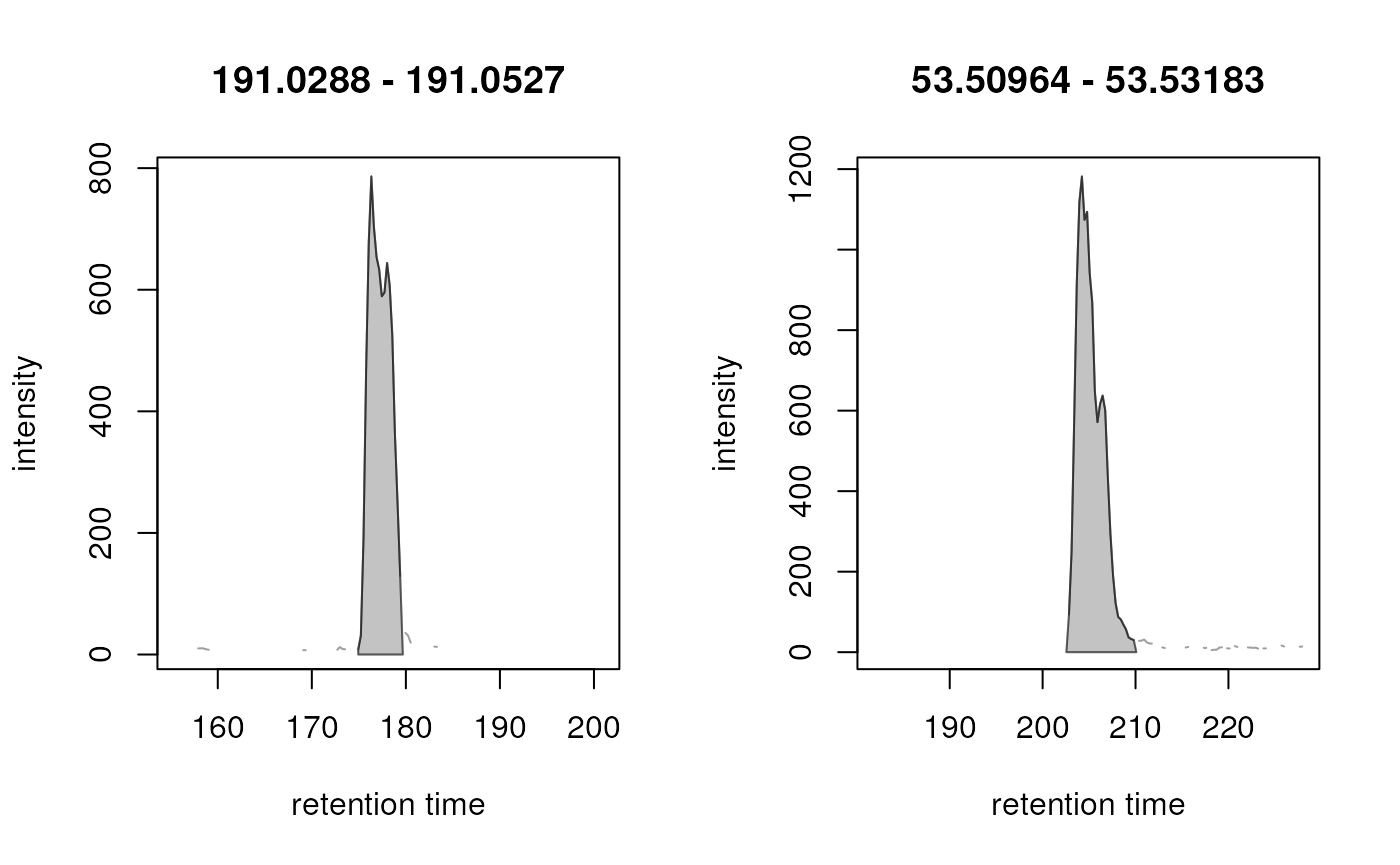

We can observe that the artificially split peaks have been

appropriately merged. Therefore, we next apply these settings to the

entire dataset. After peak merging, column "merged" in the

result object’s chromPeakData() data frame can be used to

evaluate which chromatographic peaks in the result represent signal from

merged, or from the originally identified chromatographic peaks.

#' Apply on whole dataset

data <- refineChromPeaks(data, param = param, chunkSize = 5)

chromPeakData(data)$merged |>

table()##

## FALSE TRUE

## 79908 9274Before proceeding with the next preprocessing step it is generally suggested to evaluate the results of the chromatographic peak detection on EICs of e.g. internal standards or other compounds/ions known to be present in the samples.

eics_is_chrompeaks <- eic_is

eic_is <- chromatogram(data,

rt = as.matrix(intern_standard[, c("rtmin", "rtmax")]),

mz = as.matrix(intern_standard[, c("mzmin", "mzmax")]))

fData(eic_is) <- fData(eics_is_chrompeaks)

eic_cystine <- eic_is["cystine_13C_15N", ]

eic_met <- eic_is["methionine_13C_15N", ]Additionally, evaluating and comparing the number of identified chromatographic peaks in all samples of a data set can help spotting potentially problematic samples. Below we count the number of chromatographic peaks per sample and show these numbers in a table.

#' Count the number of peaks per sample and summarize them in a table.

data.frame(sample_name = sampleData(data)$sample_name,

peak_count = as.integer(table(chromPeaks(data)[, "sample"]))) |>

pandoc.table(

style = "rmarkdown",

caption = "Table 4.Samples and number of identified chromatographic peaks.")| sample_name | peak_count |

|---|---|

| POOL1 | 9287 |

| A | 8986 |

| B | 8738 |

| POOL2 | 9193 |

| C | 8351 |

| D | 8778 |

| POOL3 | 9211 |

| E | 8787 |

| F | 8515 |

| POOL4 | 9336 |

A similar number of chromatographic peaks was identified within the various samples of the data set.

Additional options to evaluate the results of the chromatographic

peak detection can be implemented using the

plotChromPeaks() function or by summarizing the results

using base R commands.

Retention time alignment

Despite using the same chromatographic settings and conditions retention time shifts are unavoidable. Indeed, the performance of the instrument can change over time, for example due to small variations in environmental conditions, such as temperature and pressure. These shifts will be generally small if samples are measured within the same batch/measurement run, but can be considerable if data of an experiment was acquired across a longer time period.

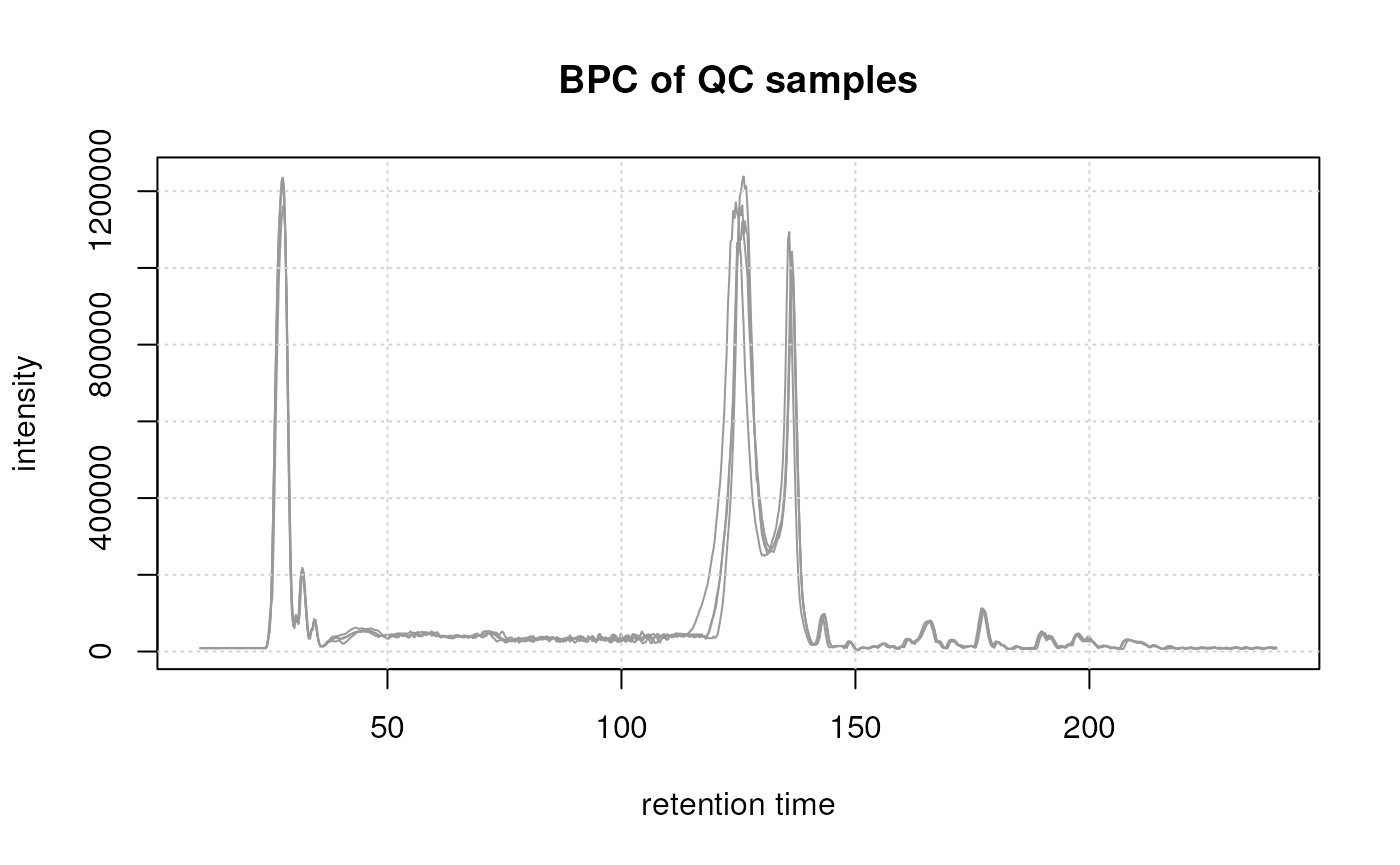

To evaluate the presence of a shift we extract and plot below the BPC from the QC samples.

#' Get QC samples

QC_samples <- sampleData(data)$phenotype == "QC"

#' extract BPC

data[QC_samples] |>

chromatogram(aggregationFun = "max", chromPeaks = "none") |>

plot(col = col_phenotype["QC"], main = "BPC of QC samples") |>

grid()

These QC samples representing the same sample (pool) were measured at regular intervals during the measurement run of the experiment and were all measured on the same day. Still, small shifts can be observed, especially in the region between 100 and 150 seconds. To facilitate proper correspondence of signals across samples (and hence definition of the LC-MS features), it is essential to minimize these differences in retention times.

Theoretically, we proceed in two steps: first we select only the QC samples of our dataset and do a first alignment on these, using the so-called anchor peaks. In this way we can assume a linear shift in time, since we are always measuring the same sample in different regular time intervals. Despite having external QCs in our data set, we still use the subset-based alignment assuming retention time shifts to be independent of the different sample matrix (human serum or plasma) and instead are mostly instrument-dependent. Note that it would also be possible to manually specify anchor peaks, respectively their retention times or to align a data set against an external, reference, data set. More information is provided in the vignettes of the xcms package.

After calculating how much to adjust the retention time in these samples, we apply this shift also on the study samples.

In xcms retention time alignment can be performed using the

adjustRtime() function with an alignment algorithm. For

this example we use the PeakGroups method (Smith et al. 2006) that performs the alignment

by minimizing differences in retention times of a set of anchor

peaks in the different samples. This method requires an initial

correspondence analysis to match/group chromatographic peaks across

samples from which the algorithm then selects the anchor peaks for the

alignment.

For the initial correspondence, we use the PeakDensity

approach (Smith et al. 2006) that groups

chromatographic peaks with similar m/z and retention time into

LC-MS features. The parameters for this algorithm, that can be

configured using the PeakDensityParam object, are

sampleGroups, minFraction,

binSize, ppm and bw.

binSize, ppm and bw allow to

specify how similar the chromatographic peaks’ m/z and

retention time values need to be to consider them for grouping into a

feature.

binSizeandppmdefine the required similarity of m/z values. Within each m/z bin (defined bybinSizeandppm) areas along the retention time axis with a high chromatographic peak density (considering all peaks in all samples) are identified, and chromatographic peaks within these regions are considered for grouping into a feature.High density areas are identified using the base R

density()function, for whichbwis a parameter: higher values define wider retention time areas, lower values require chromatographic peaks to have more similar retention times. This parameter can be seen as the black line on the plot below, corresponding to the smoothness of the density curve.

Whether such candidate peaks get grouped into a feature depends also

on parameters sampleGroups and

minFraction:

sampleGroupsshould provide, for each sample, the sample group it belongs to.minFractionis expected to be a value between 0 and 1 defining the proportion of samples within at least one of the sample groups (defined withsampleGroups) in which a chromatographic peaks was detected to group them into a feature.

For the initial correspondence, parameters don’t need to be fully

optimized. Selection of dataset-specific parameter values is described

in more detail in the next section. For our dataset, we use small values

for binSize and ppm and, importantly, also for

parameter bw, since for our data set an ultra high

performance (UHP) LC setup was used [anna: maybe I have been out of this

field for too long, but I don’t see the connection between UHPLC and

choice of small values for these parameters. Is it something empirical?

phili: jo can you help me here ?]. For minFraction we use a

high value (0.9) to ensure only features are defined for chromatographic

peaks present in almost all samples of one sample group (which can then

be used as anchor peaks for the actual alignment). We will base the

alignment later on QC samples only and hence define for

sampleGroups a binary variable grouping samples either into

a study, or QC group.

# Initial correspondence analysis

param <- PeakDensityParam(sampleGroups = sampleData(data)$phenotype == "QC",

minFraction = 0.9,

binSize = 0.01, ppm = 10,

bw = 2)

data <- groupChromPeaks(data, param = param)

plotChromPeakDensity(

eic_cystine, param = param,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(eic_cystine)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(eic_cystine)[, "sample"]], 20),

peakPch = 16)

PeakGroups-based alignment can next be performed using the

adjustRtime() function with a PeakGroupsParam

parameter object. The parameters for this algorithm are:

subsetAdjustandsubset: Allows for subset alignment. Here we base the retention time alignment on the QC samples, i.e., retention time shifts will be estimated based on these repeatedly measured samples. The resulting adjustment is then applied to the entire data. For data sets in which QC samples (e.g. sample pools) are measured repeatedly, we strongly suggest to use this method. Note also that for subset-based alignment the samples should be ordered by injection index (i.e., in the order in which they were measured during the measurement run).minFraction: A value between 0 and 1 defining the proportion of samples (of the full data set, or the data subset defined withsubset) in which a chromatographic peak has to be identified to use it as anchor peak. This is in contrast to thePeakDensityParamwhere this parameter was used to define a proportion within a sample group.span: The PeakGroups method allows, depending on the data, to adjust regions along the retention time axis differently. To enable such local alignments the LOESS function is used and this parameter defines the degree of smoothing of this function. Generally, values between 0.4 and 0.6 are used, however, it is suggested to evaluate alignment results and eventually adapt parameters if the result was not satisfactory.

Below we perform the alignment of our data set based on retention times of anchor peaks defined in the subset of QC samples.

#' Define parameters of choice

subset <- which(sampleData(data)$phenotype == "QC")

param <- PeakGroupsParam(minFraction = 0.9, extraPeaks = 50, span = 0.5,

subsetAdjust = "average",

subset = subset)

#' Perform the alignment

data <- adjustRtime(data, param = param)Alignment adjusted the retention times of all spectra in the data set, as well as the retention times of all identified chromatographic peaks.

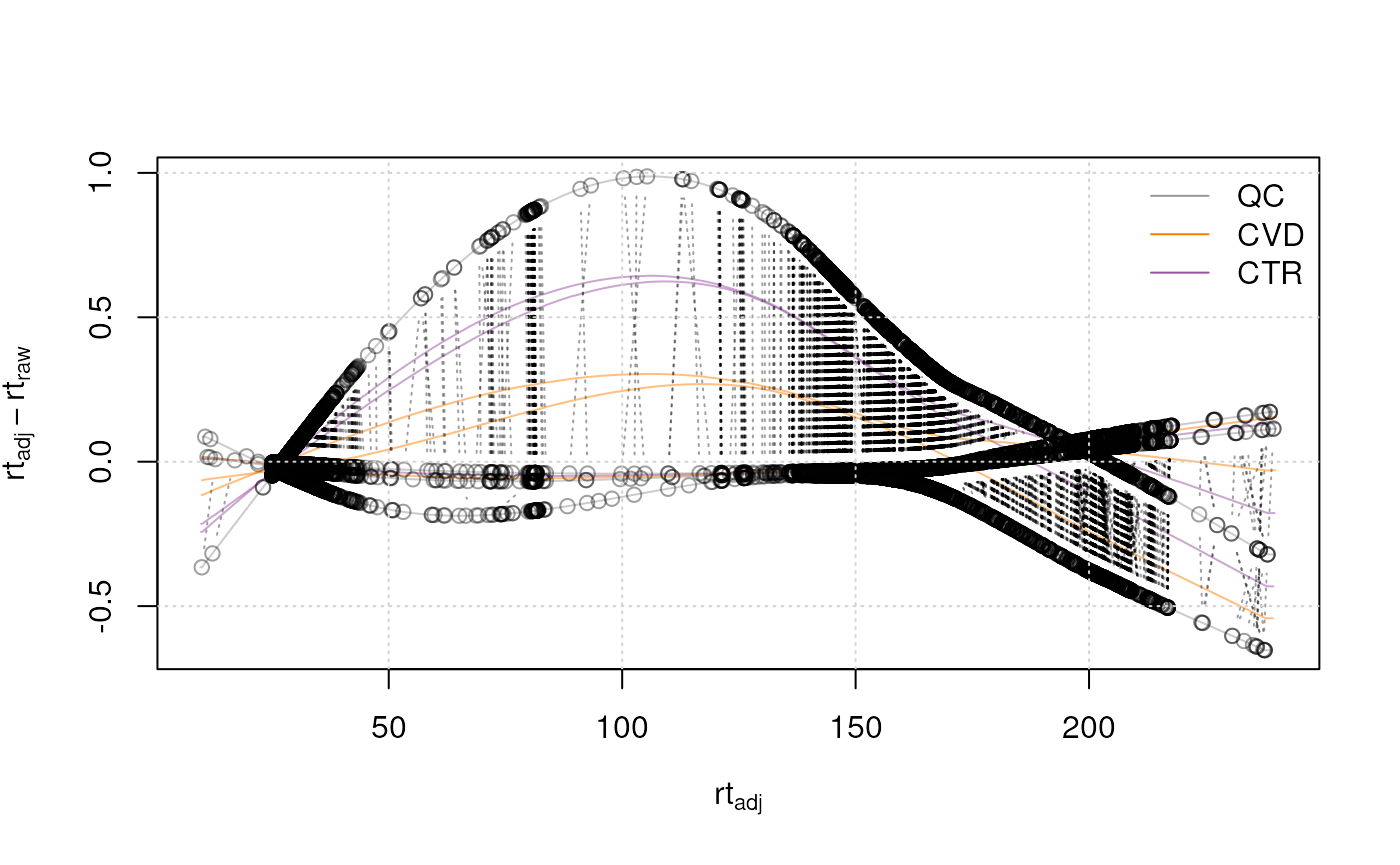

Once the alignment has been performed, the user should evaluate the

results using the plotAdjustedRtime() function. This

function visualizes the difference between adjusted and raw retention

time for each sample on the y-axis along the adjusted retention time on

the x-axis. Dot points represent the position of the used anchor peak

along the retention time axis. For optimal alignment in all areas along

the retention time axis, these anchor peaks should be scattered all over

the retention time dimension.

#' Visualize alignment results

plotAdjustedRtime(data, col = paste0(col_sample, 80), peakGroupsPch = 1)

grid()

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, bty = "n")

All samples from the present data set were measured within the same measurement run, resulting in small retention time shifts. Therefore, only little adjustments needed to be performed (shifts of at maximum 1 second as can be seen in the plot above). Generally, the magnitude of adjustment seen in such plots should match the expectation from the analyst.

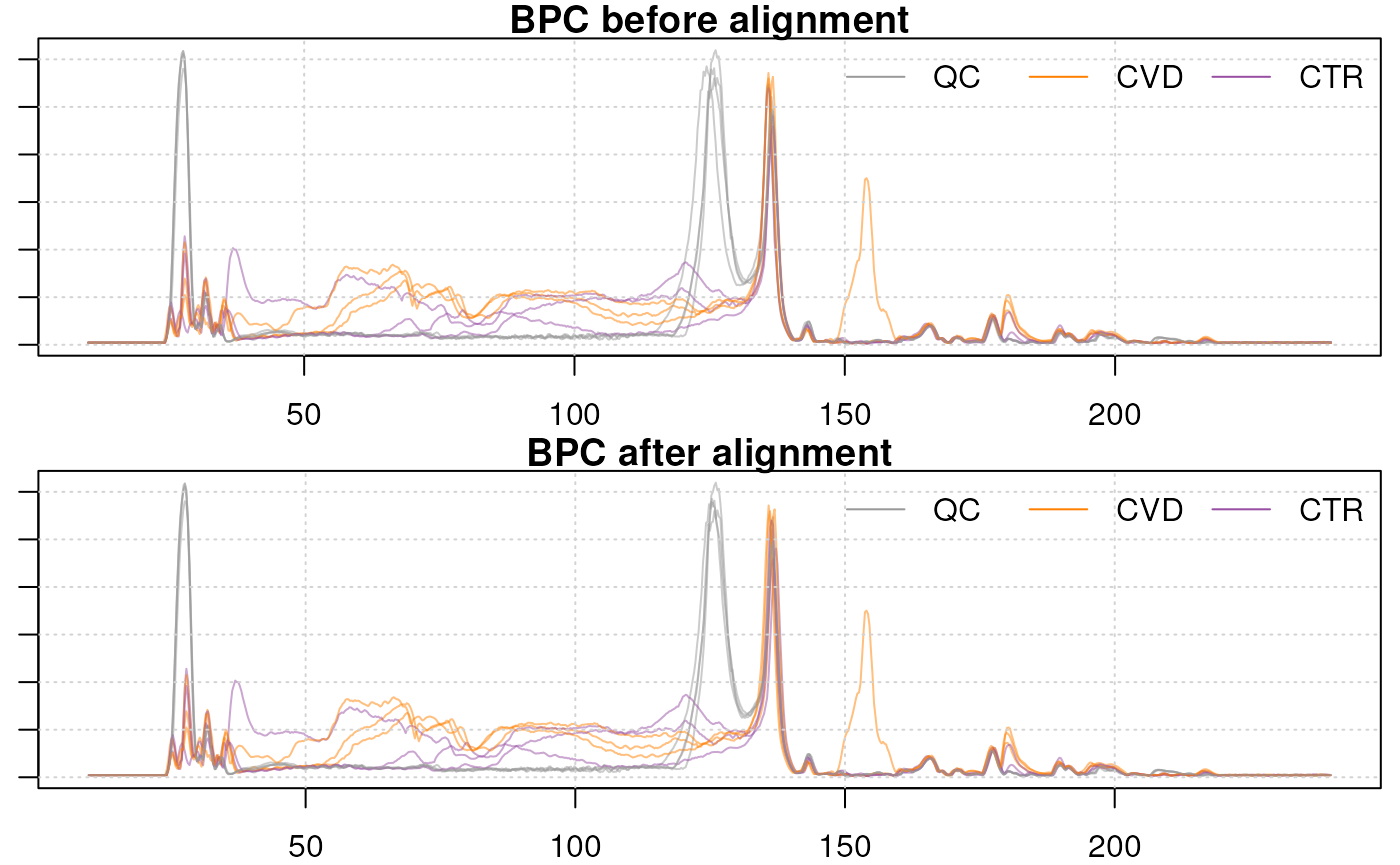

We can also compare the BPC before and after alignment. To get the

original data, i.e. the raw retention times, we can use the

dropAdjustedRtime() function:

#' Get data before alignment

data_raw <- dropAdjustedRtime(data)

#' Apply the adjusted retention time to our dataset

data <- applyAdjustedRtime(data)

#' Plot the BPC before and after alignment

par(mfrow = c(2, 1), mar = c(2, 1, 1, 0.5))

chromatogram(data_raw, aggregationFun = "max", chromPeaks = "none") |>

plot(main = "BPC before alignment", col = paste0(col_sample, 80))

grid()

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, bty = "n", horiz = TRUE)

chromatogram(data, aggregationFun = "max", chromPeaks = "none") |>

plot(main = "BPC after alignment",

col = paste0(col_sample, 80))

grid()

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, bty = "n", horiz = TRUE)

The largest shift can be observed in the retention time range from 120 to 130s. Apart from that retention time range, only little changes can be observed.

We next evaluate the impact of the alignment on the EICs of the selected internal standards. We thus below first extract the ion chromatograms after alignment.

#' Store the EICs before alignment

eics_is_refined <- eic_is

#' Update the EICs

eic_is <- chromatogram(data,

rt = as.matrix(intern_standard[, c("rtmin", "rtmax")]),

mz = as.matrix(intern_standard[, c("mzmin", "mzmax")]))

fData(eic_is) <- fData(eics_is_refined)

#' Extract the EICs for the test ions

eic_cystine <- eic_is["cystine_13C_15N"]

eic_met <- eic_is["methionine_13C_15N"]We can now evaluate the alignment effect in our test ions. We will plot the EICs before and after alignment for both the isotope labeled cystine and methionine.

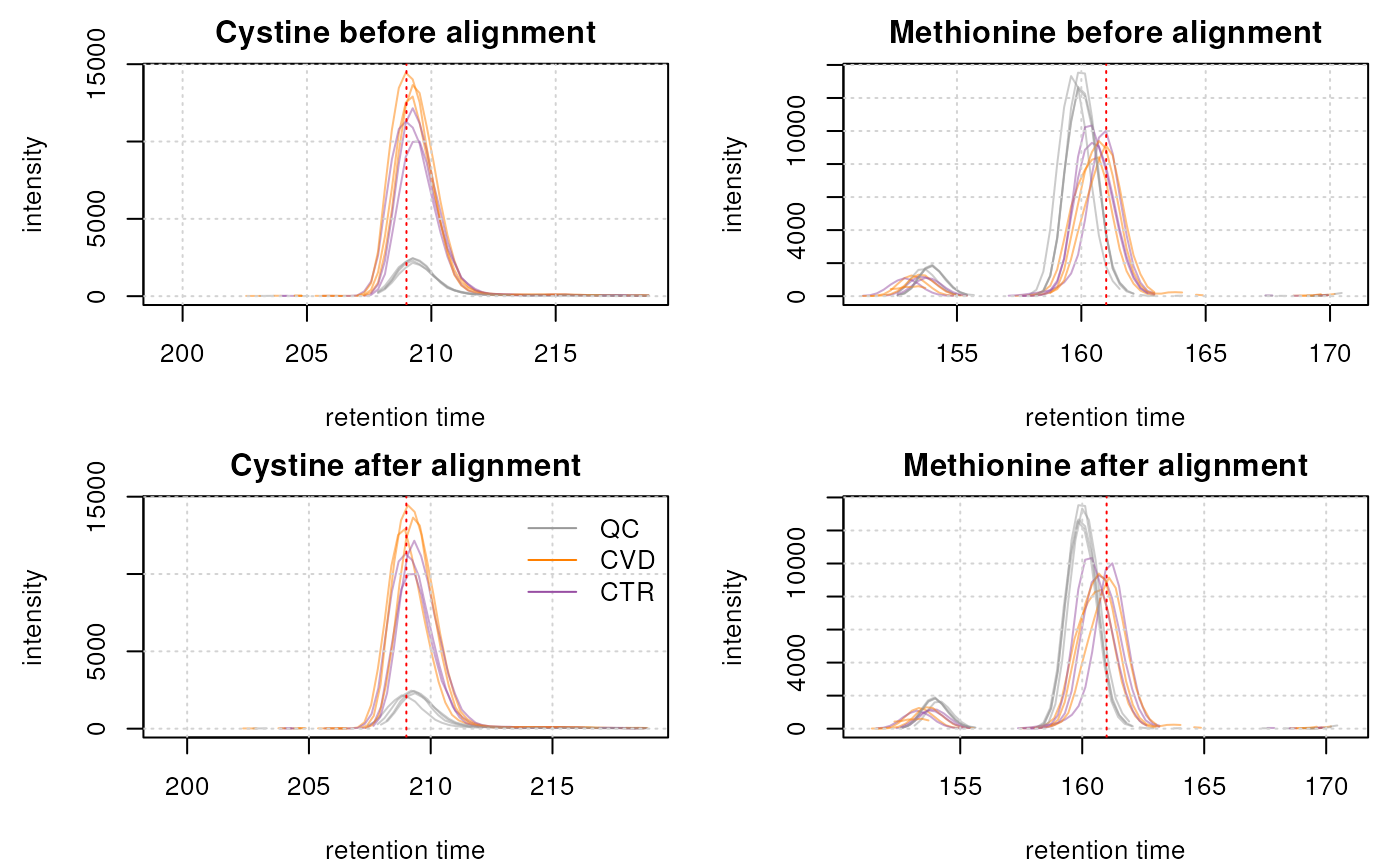

par(mfrow = c(2, 2), mar = c(4, 4.5, 2, 1))

old_eic_cystine <- eics_is_refined["cystine_13C_15N"]

plot(old_eic_cystine, main = "Cystine before alignment", peakType = "none",

col = paste0(col_sample, 80))

grid()

abline(v = intern_standard["cystine_13C_15N", "RT"], col = "red", lty = 3)

old_eic_met <- eics_is_refined["methionine_13C_15N"]

plot(old_eic_met, main = "Methionine before alignment",

peakType = "none", col = paste0(col_sample, 80))

grid()

abline(v = intern_standard["methionine_13C_15N", "RT"], col = "red", lty = 3)

plot(eic_cystine, main = "Cystine after alignment", peakType = "none",

col = paste0(col_sample, 80))

grid()

abline(v = intern_standard["cystine_13C_15N", "RT"], col = "red", lty = 3)

legend("topright", col = col_phenotype,

legend = names(col_phenotype), lty = 1, bty = "n")

plot(eic_met, main = "Methionine after alignment",

peakType = "none", col = paste0(col_sample, 80))

grid()

abline(v = intern_standard["methionine_13C_15N", "RT"], col = "red", lty = 3)

The non-endogenous cystine ion was already well aligned so the difference is minimal. The methionine ion, however, shows an improvement in alignment.

In addition to a visual inspection of the results, we also evaluate

the impact of the alignment by comparing the variance in retention times

for internal standards before and after alignment. To this end, we first

need to identify chromatographic peaks in each sample with an

m/z and retention time close to the expected values for each

internal standard. For this we use the matchValues()

function from the MetaboAnnotation

package (Rainer et al. 2022) using the

MzRtParam method to identify all chromatographic peaks with

similar m/z (+/- 50 ppm) and retention time (+/- 10 seconds) to

the internal standard’s values. With parameters mzColname

and rtColname we specify the column names in the query (our

IS) and target (chromatographic peaks) that contain the m/z and

retention time values on which to match entities. We perform this

matching separately for each sample. For each internal standard in every

sample, we use the filterMatches() function and the

SingleMatchParam() parameter to select the chromatographic

peak with the highest intensity.

#' Extract the matrix with all chromatographic peaks and add a column

#' with the ID of the chromatographic peak

chrom_peaks <- chromPeaks(data) |> as.data.frame()

chrom_peaks$peak_id <- rownames(chrom_peaks)

#' Define the parameters for the matching and filtering of the matches

p_1 <- MzRtParam(ppm = 50, toleranceRt = 10)

p_2 <- SingleMatchParam(duplicates = "top_ranked", column = "target_maxo",

decreasing = TRUE)

#' Iterate over samples and identify for each the chromatographic peaks

#' with similar m/z and retention time than the onse from the internal

#' standard, and extract among them the ID of the peaks with the

#' highest intensity.

intern_standard_peaks <- lapply(seq_along(data), function(i) {

tmp <- chrom_peaks[chrom_peaks[, "sample"] == i, , drop = FALSE]

mtch <- matchValues(intern_standard, tmp,

mzColname = c("mz", "mz"),

rtColname = c("RT", "rt"),

param = p_1)

mtch <- filterMatches(mtch, p_2)

mtch$target_peak_id

}) |>

do.call(what = cbind)We have now for each internal standard the ID of the chromatographic peak in each sample that most likely represents signal from that ion. We can now extract the retention times for these chromatographic peaks before and after alignment.

#' Define the index of the selected chromatographic peaks in the

#' full chromPeaks matrix

idx <- match(intern_standard_peaks, rownames(chromPeaks(data)))

#' Extract the raw retention times for these

rt_raw <- chromPeaks(data_raw)[idx, "rt"] |>

matrix(ncol = length(data_raw))

#' Extract the adjusted retention times for these

rt_adj <- chromPeaks(data)[idx, "rt"] |>

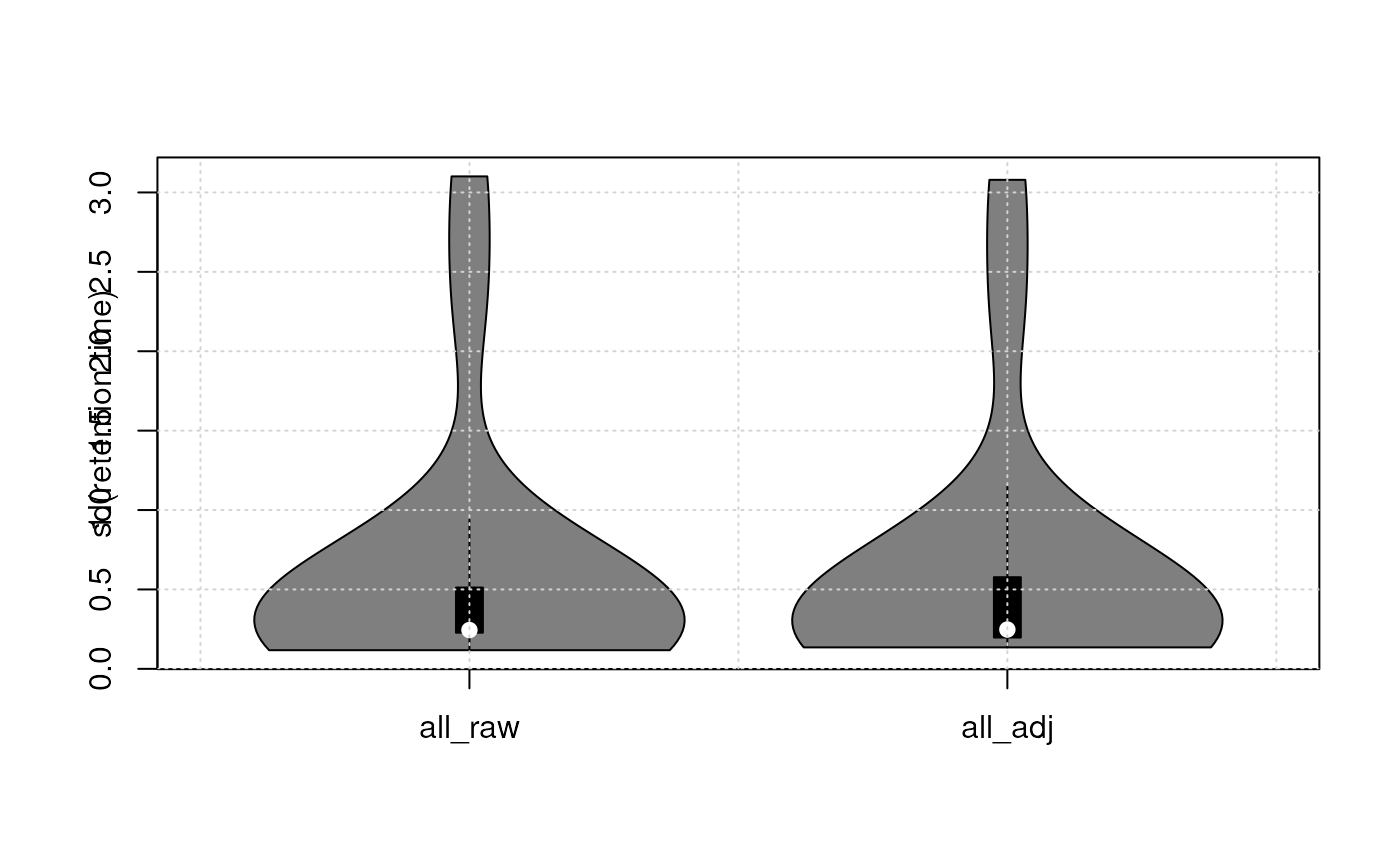

matrix(ncol = length(data_raw))We can now evaluate the impact of the alignment on the retention times of internal standards across the full data set:

list(all_raw = rowSds(rt_raw, na.rm = TRUE),

all_adj = rowSds(rt_adj, na.rm = TRUE)

) |>

vioplot(ylab = "sd(retention time)")

grid()

On average, the variation in retention times of internal standards across samples was slightly reduced by the alignment.[Phili: actually i don’t think we can say that at all from the plot]

Correspondence

We briefly touched on the subject of correspondence before to

determine anchor peaks for alignment. Generally, the goal of the

correspondence analysis is to identify chromatographic peaks that

originate from the same types of ions in all samples of an experiment

and to group them into LC-MS features. At this point, proper

configuration of parameter bw is crucial. Here we

illustrate how sensible choices for this parameter’s value can be made.

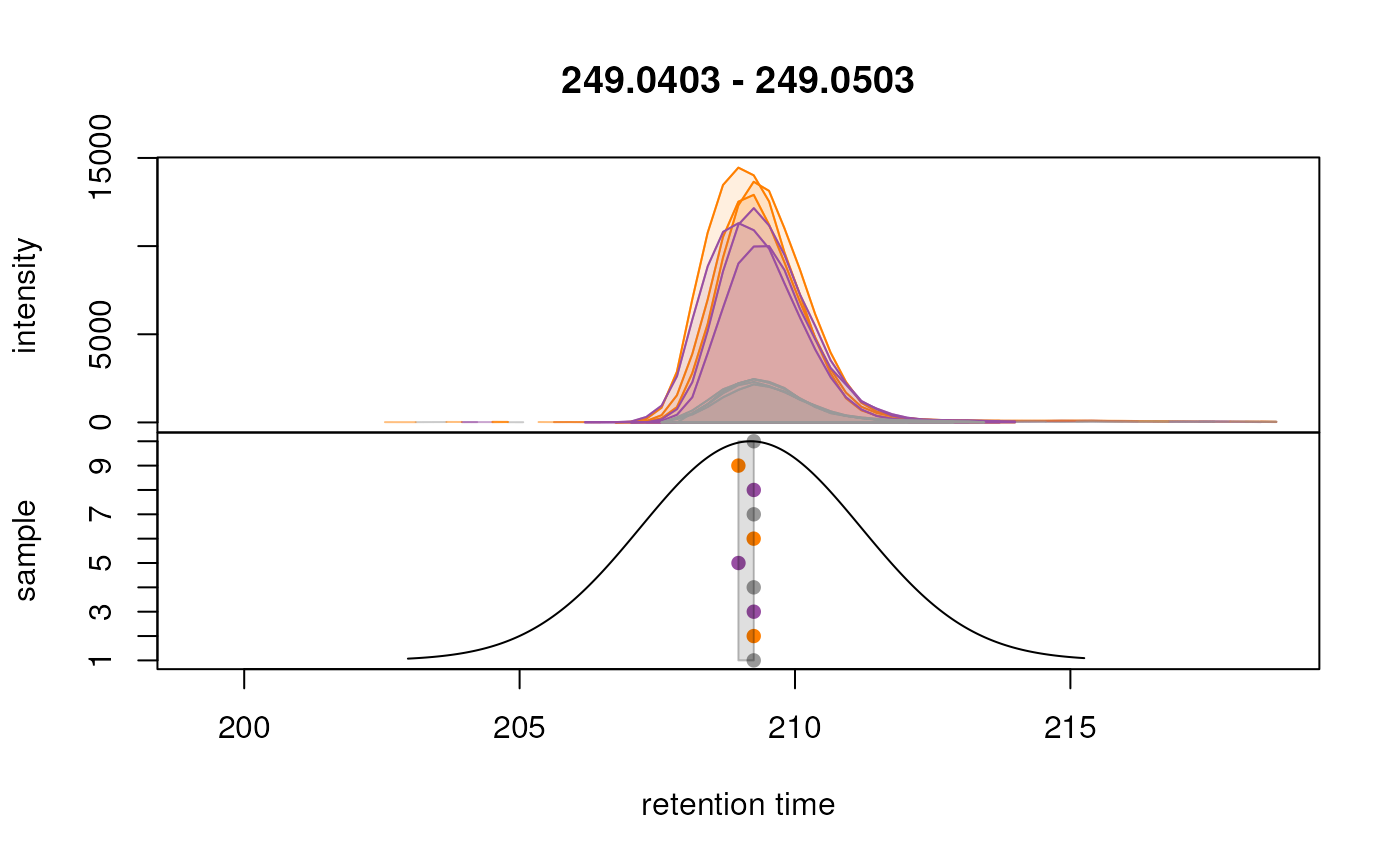

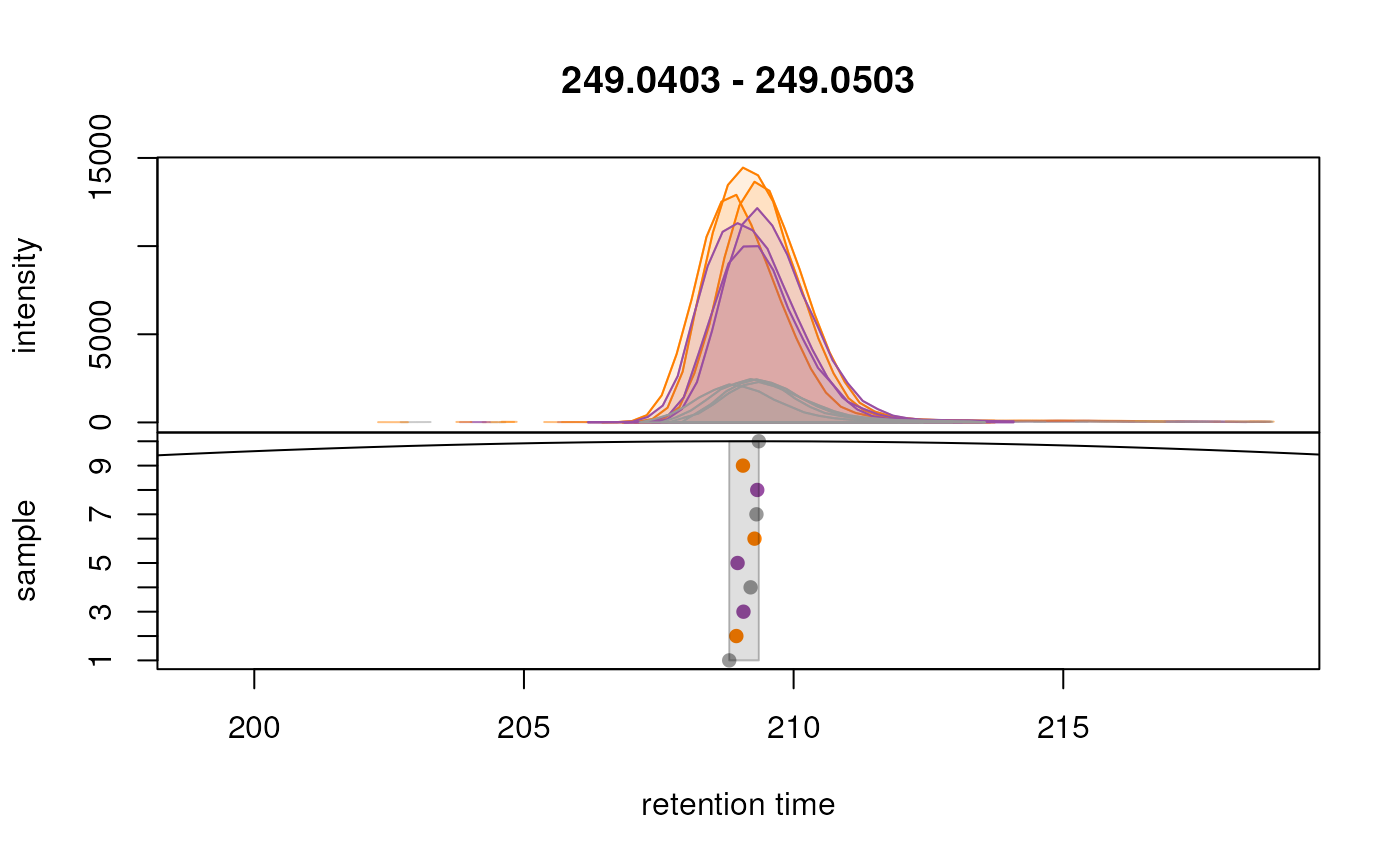

We use below the plotChromPeakDensity() function to

simulate a correspondence analysis with the default values for

PeakGroups on the extracted ion chromatograms of our two

selected isotope labeled ions. This plot shows the EIC in the top panel,

and the apex position of chromatographic peaks in the different samples

(y-axis), along retention time (x-axis) in the lower panel.

#' Default parameter for the grouping and apply them to the test ions BPC

param <- PeakDensityParam(sampleGroups = sampleData(data)$phenotype, bw = 30)

param## Object of class: PeakDensityParam

## Parameters:

## - sampleGroups: [1] "QC" "CVD" "CTR" "QC" "CTR" "CVD" "QC" "CTR" "CVD" "QC"

## - bw: [1] 30

## - minFraction: [1] 0.5

## - minSamples: [1] 1

## - binSize: [1] 0.25

## - maxFeatures: [1] 50

## - ppm: [1] 0

plotChromPeakDensity(

eic_cystine, param = param,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(eic_cystine)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(eic_cystine)[, "sample"]], 20),

peakPch = 16)

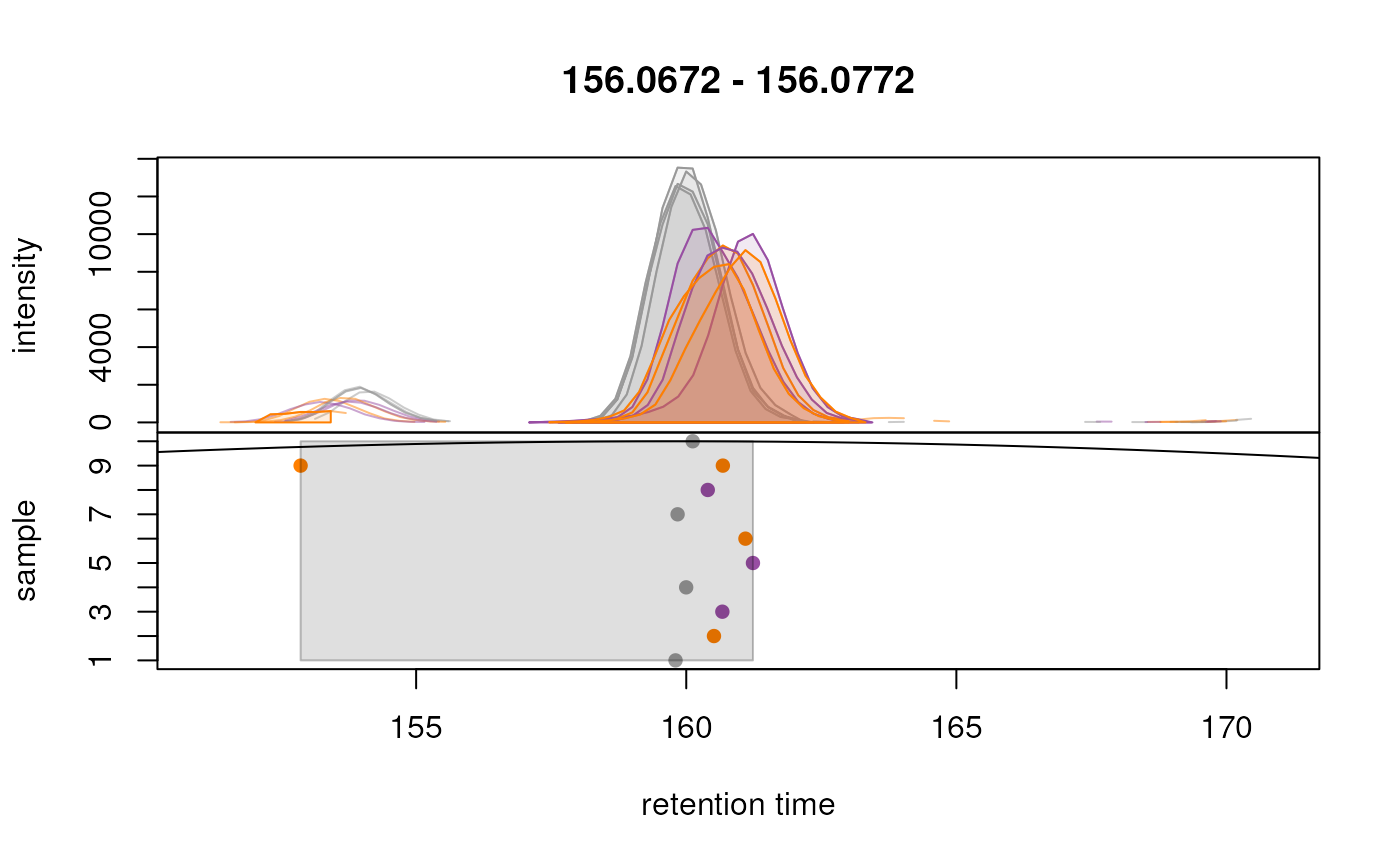

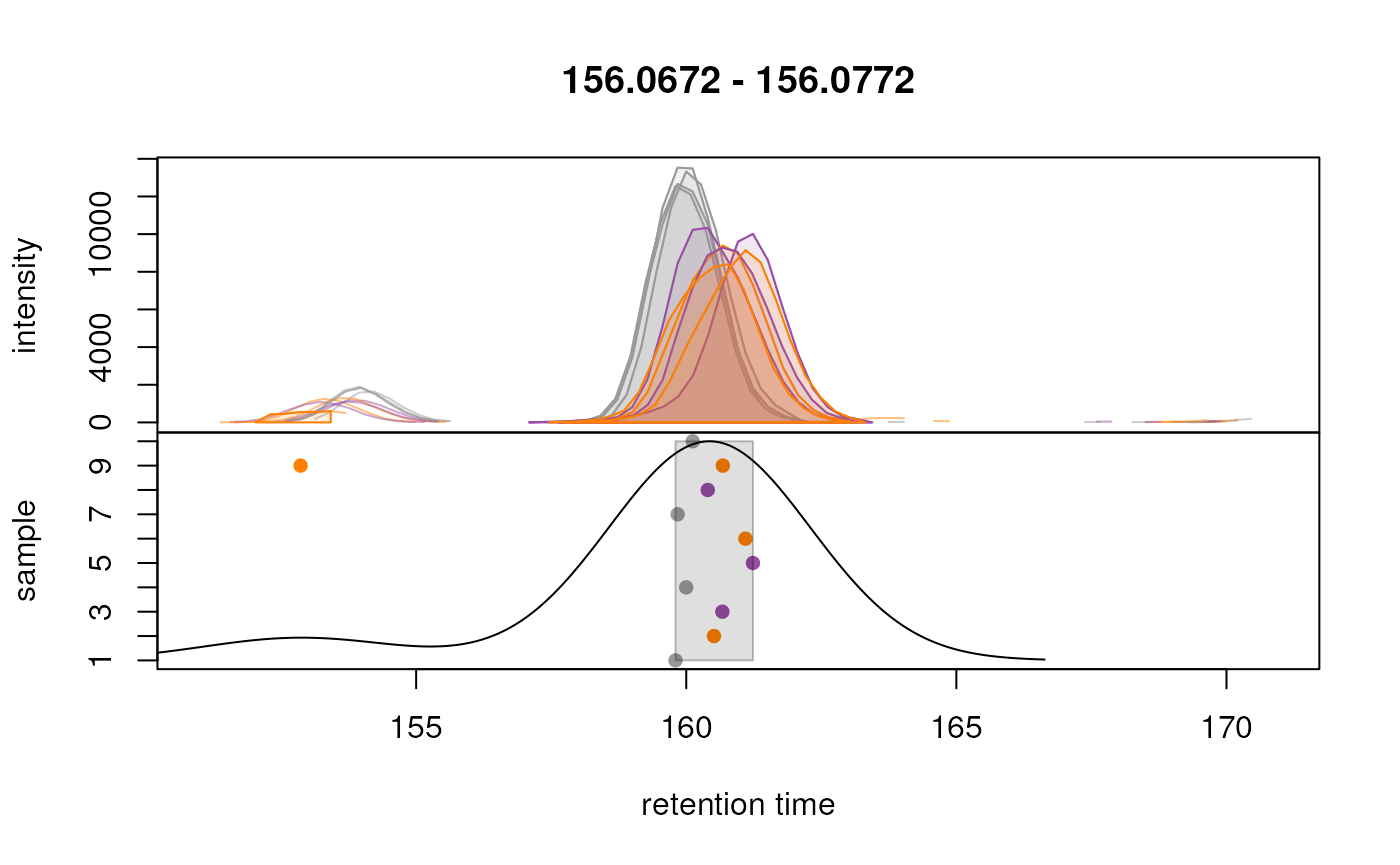

plotChromPeakDensity(eic_met, param = param,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(eic_met)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(eic_met)[, "sample"]], 20),

peakPch = 16)

Grouping of peaks depends on the smoothness of the previousl

mentionned density curve that can be configured with the parameter

bw. As seen above, the smoothness is too high to properly

group our features. When looking at the default parameters, we can

observe that indeed, the bw parameter is set to

bw = 30, which is too high for modern UHPLC-MS setups. We

reduce the value of this parameter to 1.8 and evaluate its impact.

#' Updating parameters

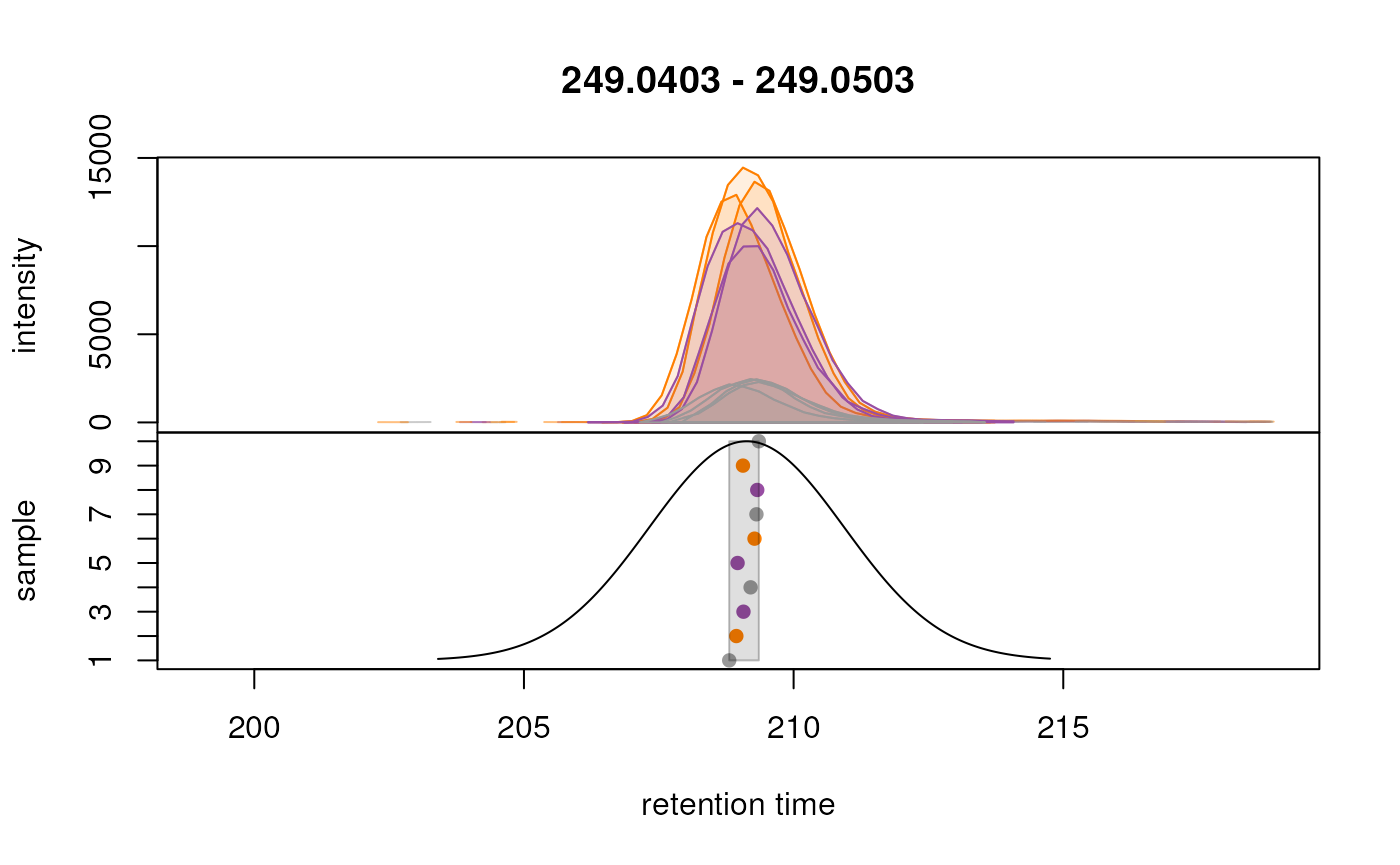

param <- PeakDensityParam(sampleGroups = sampleData(data)$phenotype, bw = 1.8)

plotChromPeakDensity(

eic_cystine, param = param,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(eic_cystine)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(eic_cystine)[, "sample"]], 20),

peakPch = 16)

plotChromPeakDensity(eic_met, param = param,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(eic_met)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(eic_met)[, "sample"]], 20),

peakPch = 16)

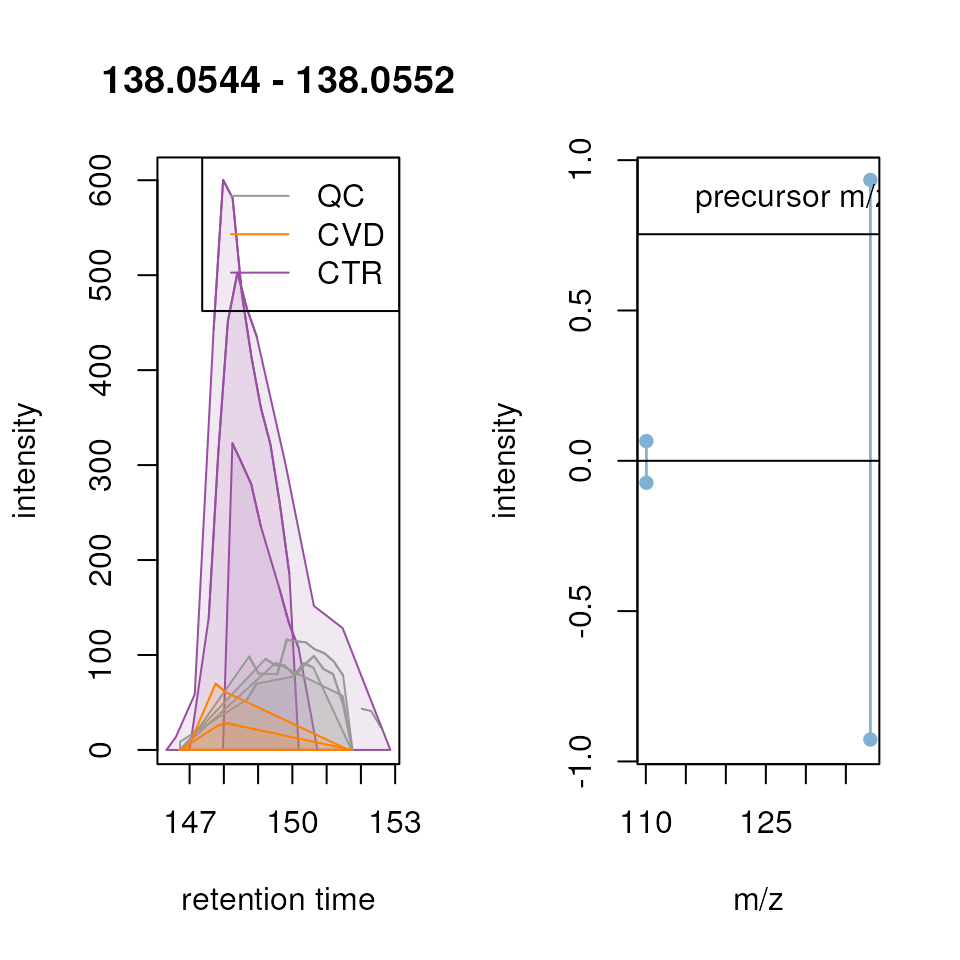

We can observe that the peaks are now grouped more accurately into a single feature for each test ion. The other important parameters optimized here are:

binsize: Our data was generated on a high resolution MS instrument, thus we select a low value for this paramete.ppm: For TOF instruments, it is suggested to use a value forppmlarger than 0 to accommodate the higher measurement error of the instrument for larger m/z values.minFraction: We set it tominFraction = 0.75, hence defining features only if a chromatographic peak was identified in at least 75% of the samples of one of the sample groups.sampleGroups: We use the information available in oursampleData’s"phenotype"column.

#' Define the settings for the param

param <- PeakDensityParam(sampleGroups = sampleData(data)$phenotype,

minFraction = 0.75, binSize = 0.01, ppm = 10,

bw = 1.8)

#' Apply to whole data

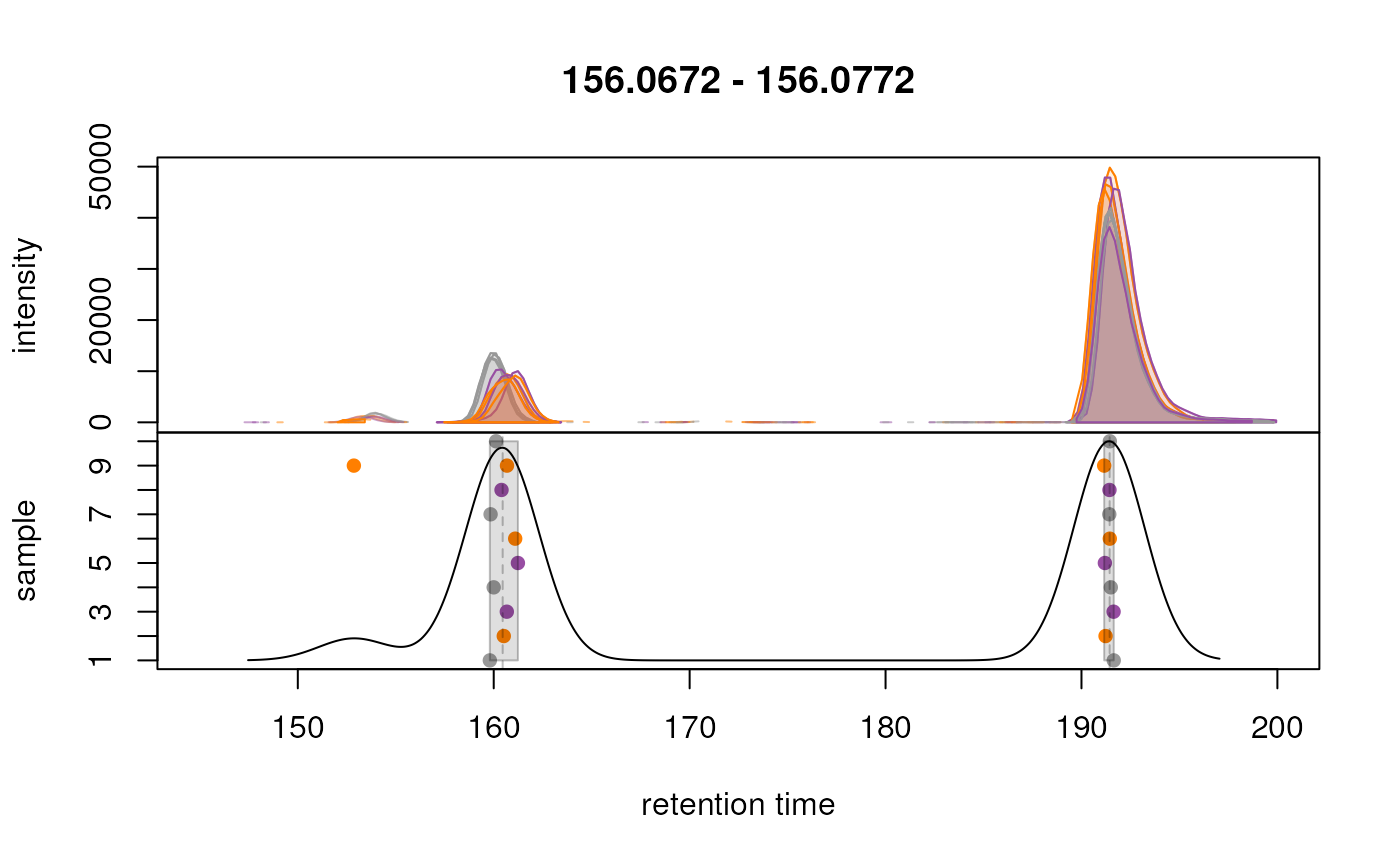

data <- groupChromPeaks(data, param = param)After correspondence analysis it is suggested to evaluate the results

again for selected EICs. Below we extract signal for an m/z

similar to that of the isotope labeled methionine for a larger retention

time range. Importantly, to show the actual correspondence results, we

set simulate = FALSE for the

plotChromPeakDensity() function.

#' Extract chromatogram for an m/z similar to the one of the labeled methionine

chr_test <- chromatogram(data,

mz = as.matrix(intern_standard["methionine_13C_15N",

c("mzmin", "mzmax")]),

rt = c(145, 200),

aggregationFun = "max")

plotChromPeakDensity(

chr_test, simulate = FALSE,

col = paste0(col_sample, "80"),

peakCol = col_sample[chromPeaks(chr_test)[, "sample"]],

peakBg = paste0(col_sample[chromPeaks(chr_test)[, "sample"]], 20),

peakPch = 16)

As hoped, signal from the two different ions are now grouped into separate features. Generally, correspondence results should be evaluated on more such extracted chromatograms.

Another interesting information to look a the the distribution of these features along the retention time axis.

# Bin features per RT slices

vc <- featureDefinitions(data)$rtmed

breaks <- seq(0, max(vc, na.rm = TRUE) + 1, length.out = 15) |>

round(0)

cuts <- cut(vc, breaks = breaks, include.lowest = TRUE)

table(cuts) |>

pandoc.table(

style = "rmarkdown",

caption = "Table 5.Distribution of features along the retention time axis (in seconds.")| [0,17] | (17,34] | (34,51] | (51,68] | (68,86] | (86,103] | (103,120] |

|---|---|---|---|---|---|---|

| 15 | 2608 | 649 | 24 | 132 | 11 | 15 |

| (120,137] | (137,154] | (154,171] | (171,188] | (188,205] | (205,222] |

|---|---|---|---|---|---|

| 105 | 1441 | 2202 | 410 | 869 | 554 |

| (222,240] |

|---|

| 33 |

The results from the correspondence analysis are now stored, along

with the results from the other preprocessing steps, within our

XcmsExperiment result object. The correspondence results,

i.e., the definition of the LC-MS features, can be extracted using the

featureDefinitions() function.

#' Definition of the features

featureDefinitions(data) |>

head()## mzmed mzmin mzmax rtmed rtmin rtmax npeaks CTR CVD QC

## FT0001 50.98979 50.98949 50.99038 203.6001 203.1181 204.2331 8 1 3 4

## FT0002 51.05904 51.05880 51.05941 191.1675 190.8787 191.5050 9 2 3 4

## FT0003 51.98657 51.98631 51.98699 203.1467 202.6406 203.6710 7 0 3 4

## FT0004 53.02036 53.02009 53.02043 203.2343 202.5652 204.0901 10 3 3 4

## FT0005 53.52080 53.52051 53.52102 203.1936 202.8490 204.0901 10 3 3 4

## FT0006 54.01007 54.00988 54.01015 159.2816 158.8499 159.4484 6 1 3 2

## peakidx ms_level

## FT0001 7702, 16.... 1

## FT0002 7176, 16.... 1

## FT0003 7680, 17.... 1

## FT0004 7763, 17.... 1

## FT0005 8353, 17.... 1

## FT0006 5800, 15.... 1This data frame provides the average m/z and retention time

(in columns "mzmed" and "rtmed") that

characterize a LC-MS feature. Column, "peakidx" contains

the indices of all chromatographic peaks that were assigned to that

feature. The actual abundances for these features, which represent also

the final preprocessing results, can be extracted with the

featureValues() function:

#' Extract feature abundances

featureValues(data, method = "sum") |>

head()## MS_QC_POOL_1_POS.mzML MS_A_POS.mzML MS_B_POS.mzML MS_QC_POOL_2_POS.mzML

## FT0001 421.6162 689.2422 NA 481.7436

## FT0002 710.8078 875.9192 NA 693.6997

## FT0003 445.5711 613.4410 NA 497.8866

## FT0004 16994.5260 24605.7340 19766.707 17808.0933

## FT0005 3284.2664 4526.0531 3521.822 3379.8909

## FT0006 10681.7476 10009.6602 NA 10800.5449

## MS_C_POS.mzML MS_D_POS.mzML MS_QC_POOL_3_POS.mzML MS_E_POS.mzML

## FT0001 NA 635.2732 439.6086 570.5849

## FT0002 781.2416 648.4344 700.9716 1054.0207

## FT0003 NA 634.9370 449.0933 NA

## FT0004 22780.6683 22873.1061 16965.7762 23432.1252

## FT0005 4396.0762 4317.7734 3270.5290 4533.8667

## FT0006 NA 7296.4262 NA 9236.9799

## MS_F_POS.mzML MS_QC_POOL_4_POS.mzML

## FT0001 579.9360 437.0340

## FT0002 534.4577 711.0361

## FT0003 461.0465 232.1075

## FT0004 22198.4607 16796.4497

## FT0005 4161.0132 3142.2268

## FT0006 6817.8785 NAWe can note that some features (e.g. F0003 and F0006) have missing values in some samples. This is expected to a certain degree as not in all samples features, respectively their ions, need to be present. We will address this in the next section.

Gap filling

The previously observed missing values (NA) could be attributed to various reasons. Although they might represent a genuinely missing value, indicating that an ion (feature) was truly not present in a particular sample, they could also be a result of a failure in the preceding chromatographic peak detection step. It is crucial to be able to recover missing values of the latter category as much as possible to reduce the eventual need for data imputation. We next examine how prevalent missing values are in our present dataset:

#' Percentage of missing values

sum(is.na(featureValues(data))) /

length(featureValues(data)) * 100## [1] 26.41597We can observe a substantial number of missing values values in our dataset. Let’s therefore delve into the process of gap-filling. We first evaluate some example features for which a chromatographic peak was only detected in some samples:

ftidx <- which(is.na(rowSums(featureValues(data))))

fts <- rownames(featureDefinitions(data))[ftidx]

farea <- featureArea(data, features = fts[1:2])

chromatogram(data[c(2, 3)],

rt = farea[, c("rtmin", "rtmax")],

mz = farea[, c("mzmin", "mzmax")]) |>

plot(col = c("red", "blue"), lwd = 2)

In both instances, a chromatographic peak was only identified in one

of the two selected samples (red line), hence a missing value is

reported for this feature in those particular samples (blue line).

However, in both cases, signal was measured in both samples, thus,

reporting a missing value would not be correct in this example. The

signal for this feature is very low, which is the most likely reason

peak detection failed. To rescue signal in such cases, the

fillChromPeaks() function can be used with the

ChromPeakAreaParam approach. This method defines an

m/z-retention time area for each feature based on detected

peaks, where the signal for the respective ion is expected. It then

integrates all intensities within this area in samples that have missing

values for that feature. This is then reported as feature abundance.

Below we apply this method using the default parameters.

#' Fill in the missing values in the whole dataset

data <- fillChromPeaks(data, param = ChromPeakAreaParam(), chunkSize = 5)

#' Percentage of missing values after gap-filling

sum(is.na(featureValues(data))) /

length(featureValues(data)) * 100## [1] 5.155492With fillChromPeaks() we could thus rescue most of the

missing data in the data set. Note that, even if in a sample no ion

would be present, in the worst case noise would be integrated, which is

expected to be much lower than actual chromatographic peak signal. Let’s

look at our previously missing values again:

After gap-filling, also in the blue colored sample a chromatographic peak is present and its peak area would be reported as feature abundance for that sample.

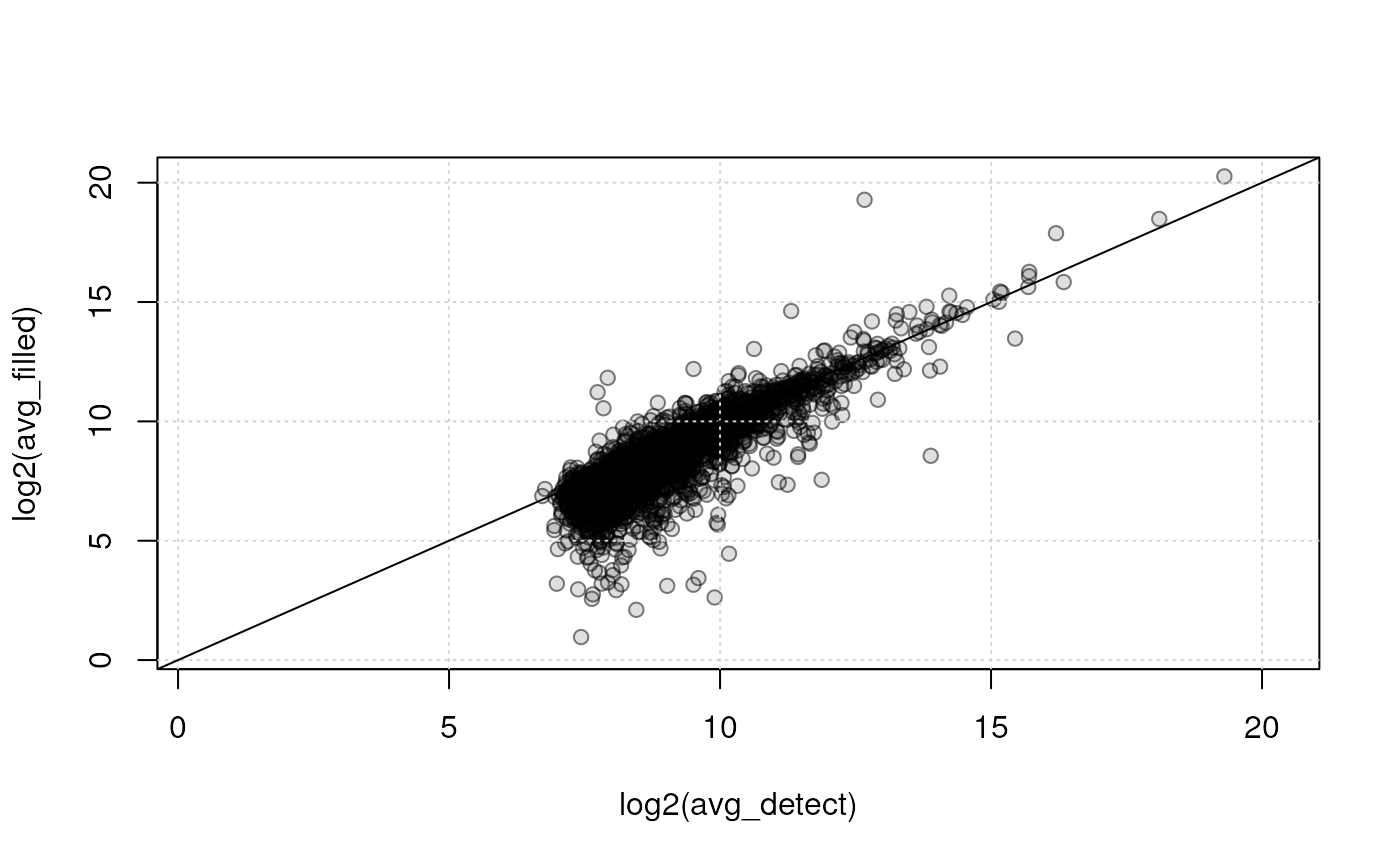

To further assess the effectiveness of the gap-filling method for rescuing signals, we can also plot the average signal of features with at least one missing value against the average of filled-in signal. It is advisable to perform this analysis on repeatedly measured samples; in this case, our QC samples will be used.

For this, we extract:

Feature values from detected chromatographic peaks by setting

filled = FALSEin thefeaturesValues()call.The filled-in signal by first extracting both detected and gap-filled abundances and then replace the values for detected chromatographic peaks with

NA.

Then, we calculate the row averages of both of these matrices and plot them against each other.

#' Get only detected signal in QC samples

vals_detect <- featureValues(data, filled = FALSE)[, QC_samples]

#' Get detected and filled-in signal

vals_filled <- featureValues(data)[, QC_samples]

#' Replace detected signal with NA

vals_filled[!is.na(vals_detect)] <- NA

#' Identify features with at least one filled peak

has_filled <- is.na(rowSums(vals_detect))

#' Calculate row averages for features with missing values

avg_detect <- rowMeans(vals_detect[has_filled, ], na.rm = TRUE)

avg_filled <- rowMeans(vals_filled[has_filled, ], na.rm = TRUE)

#' Plot the values against each other (in log2 scale)

plot(log2(avg_detect), log2(avg_filled),

xlim = range(log2(c(avg_detect, avg_filled)), na.rm = TRUE),

ylim = range(log2(c(avg_detect, avg_filled)), na.rm = TRUE),

pch = 21, bg = "#00000020", col = "#00000080")

grid()

abline(0, 1)

The detected (x-axis) and gap-filled (y-axis) values for QC samples are highly correlated. Especially for higher abundances, the agreement is very high, while for low intensities, as can be expected, differences are higher and trending to below the correlation line. Below we, in addition, fit a linear regression line to the data and summarize its results

##

## Call:

## lm(formula = log2(avg_filled) ~ log2(avg_detect))

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.8176 -0.3807 0.1725 0.5492 6.7504

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.62359 0.11545 -14.06 <2e-16 ***

## log2(avg_detect) 1.11763 0.01259 88.75 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.9366 on 2846 degrees of freedom

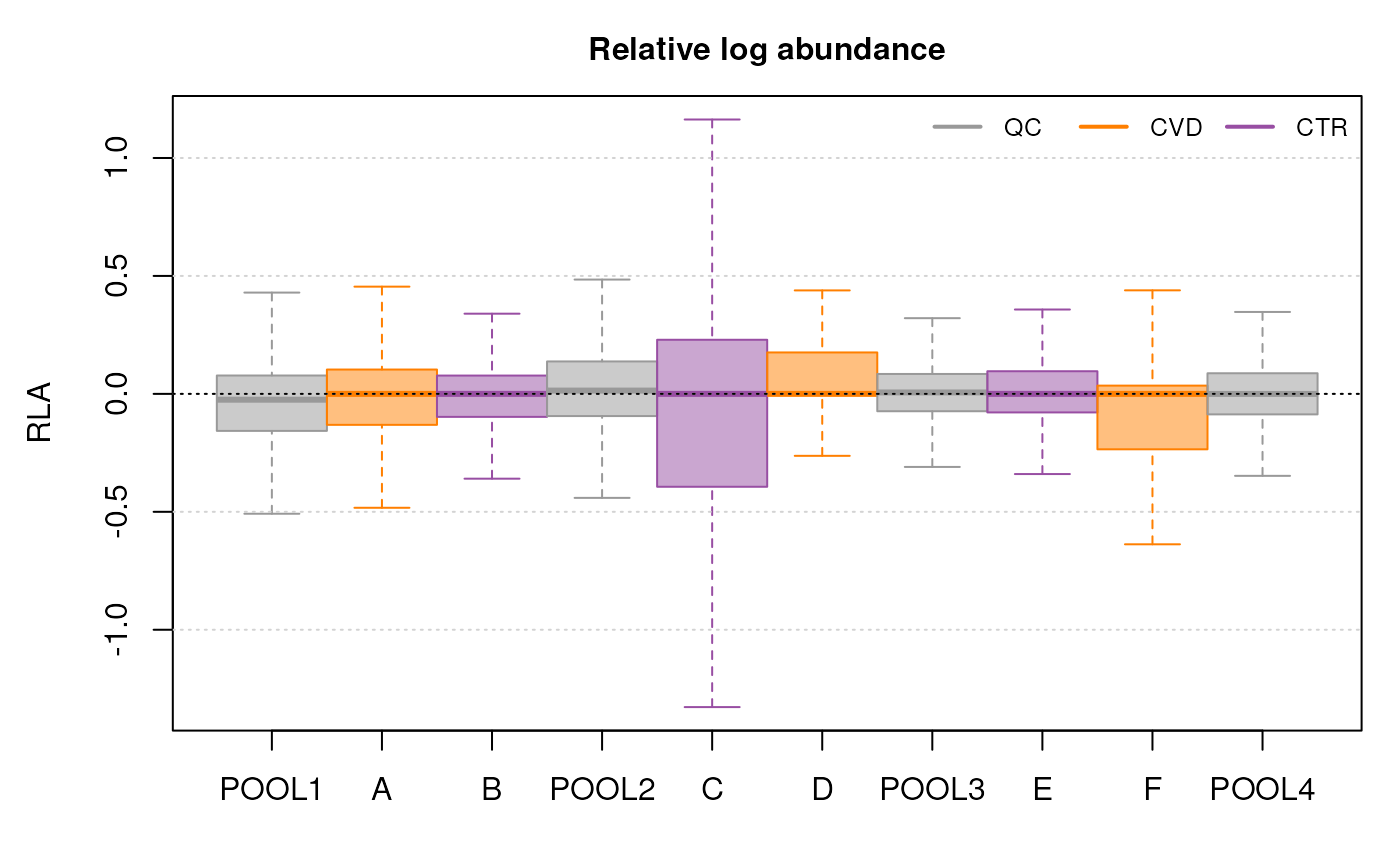

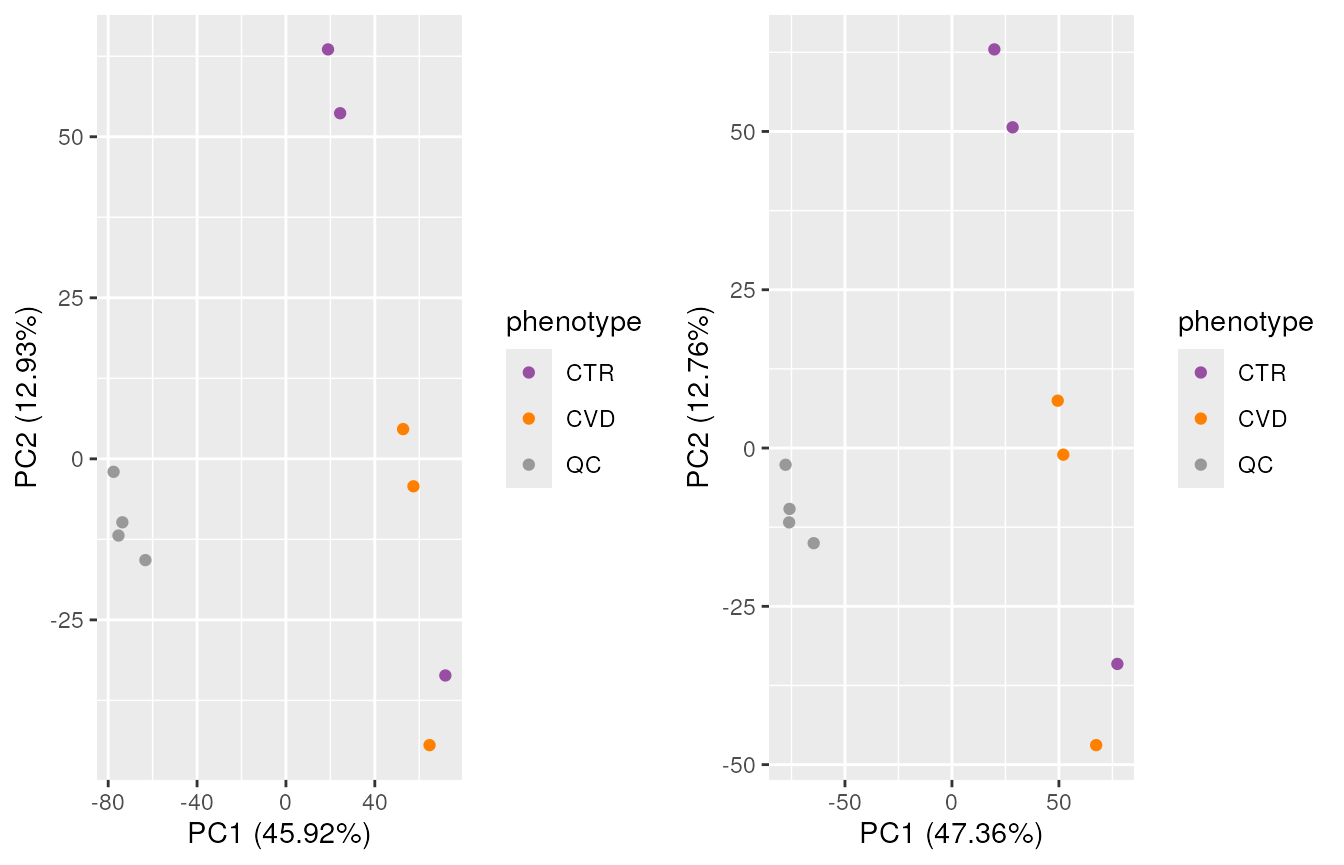

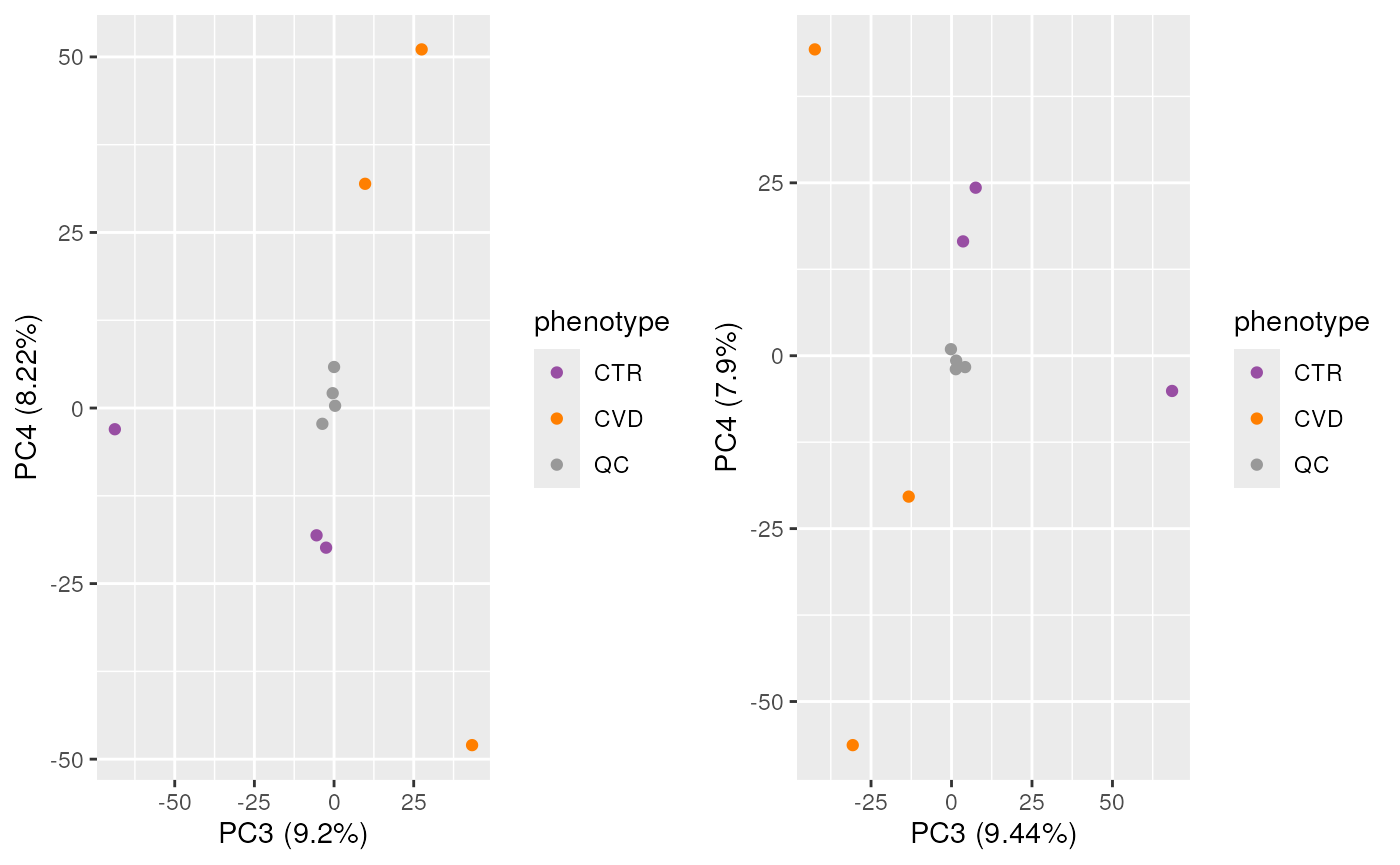

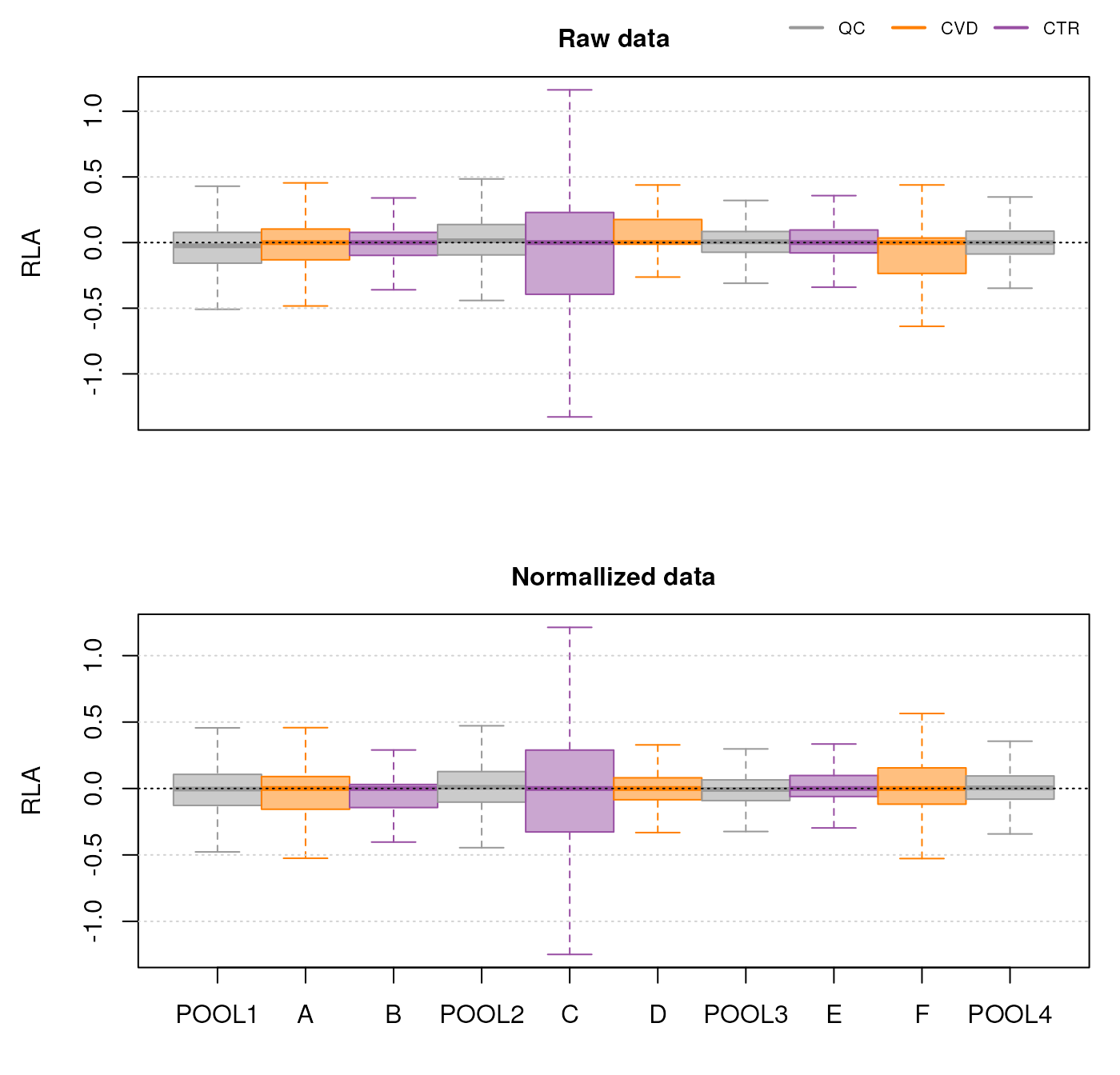

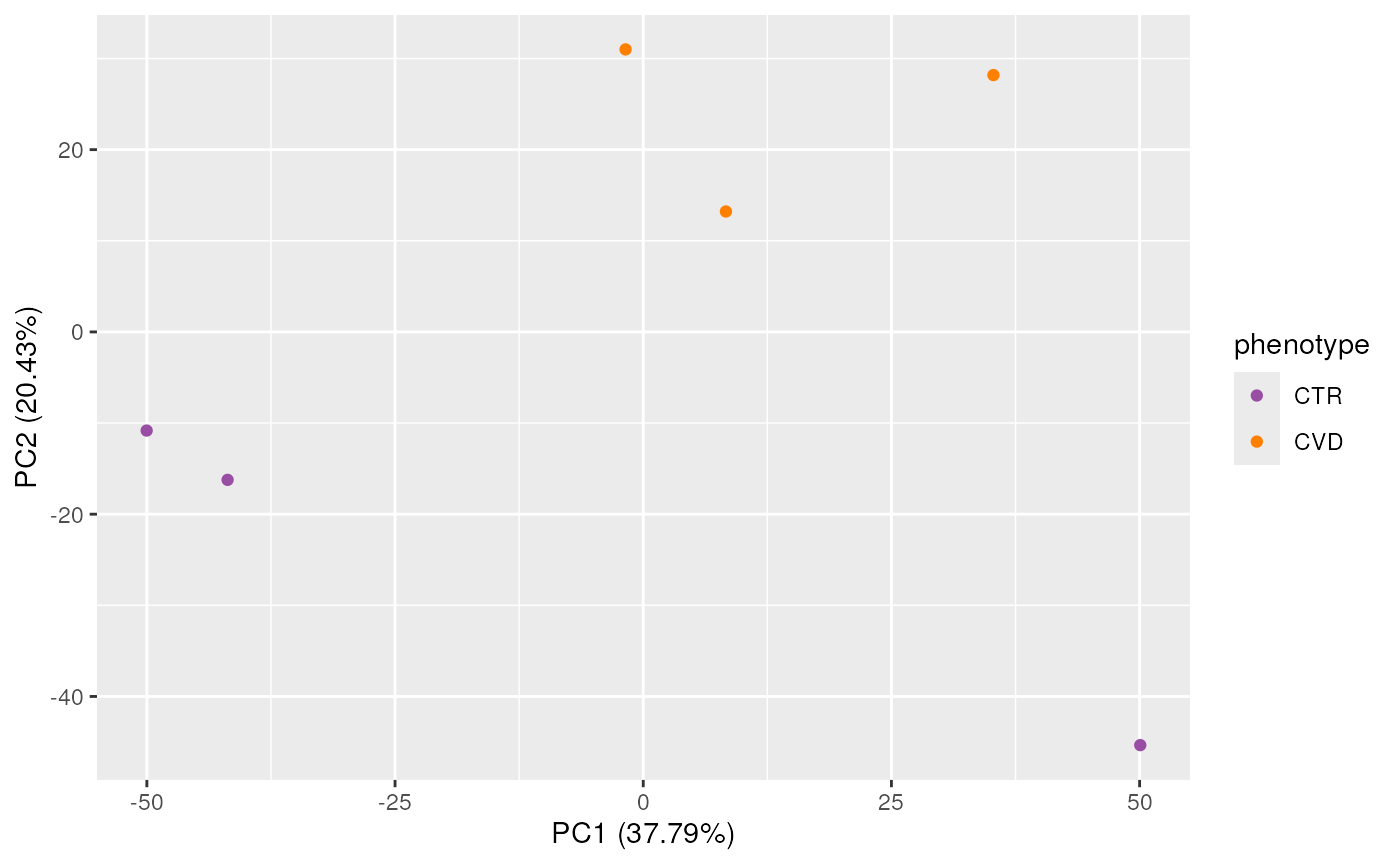

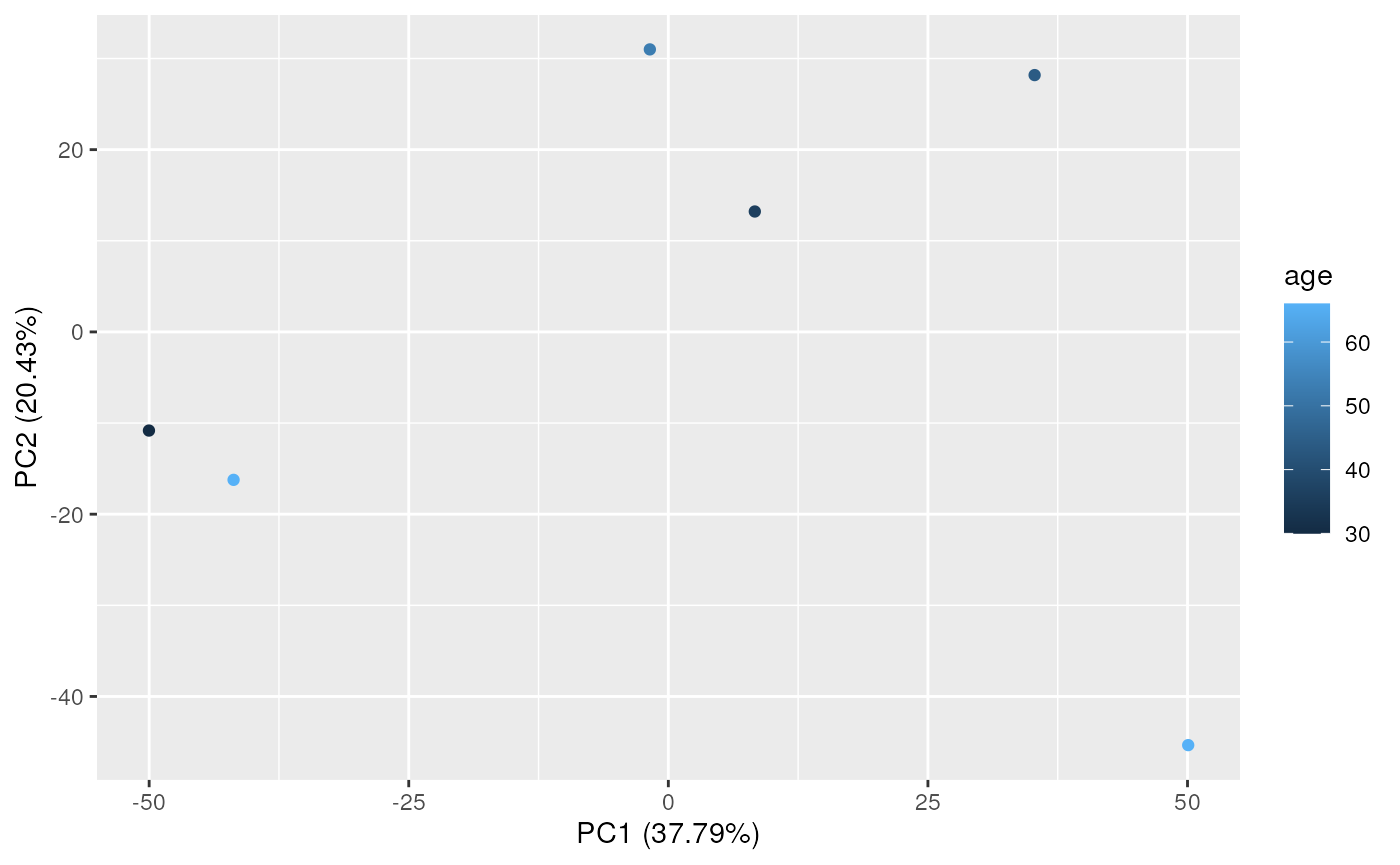

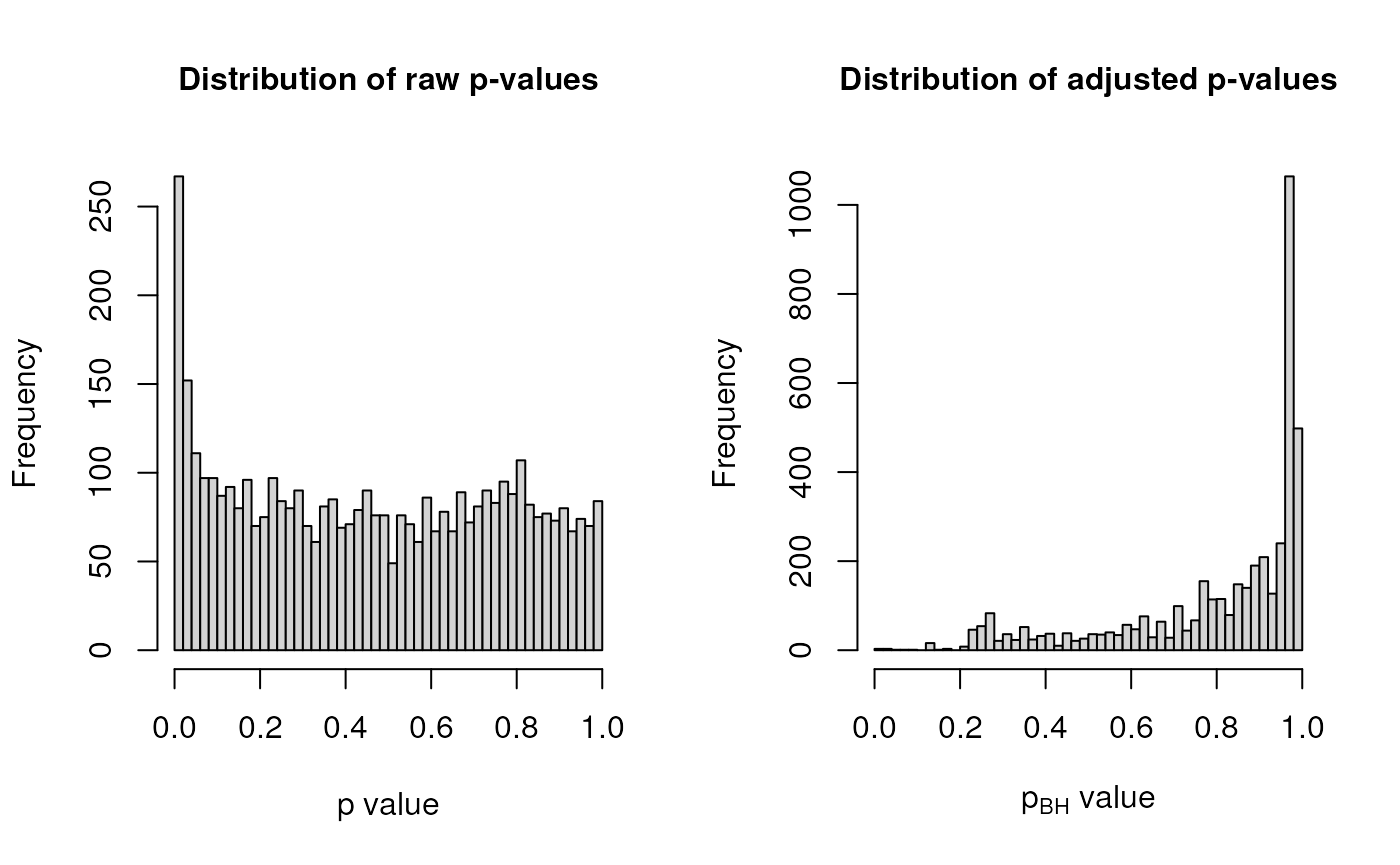

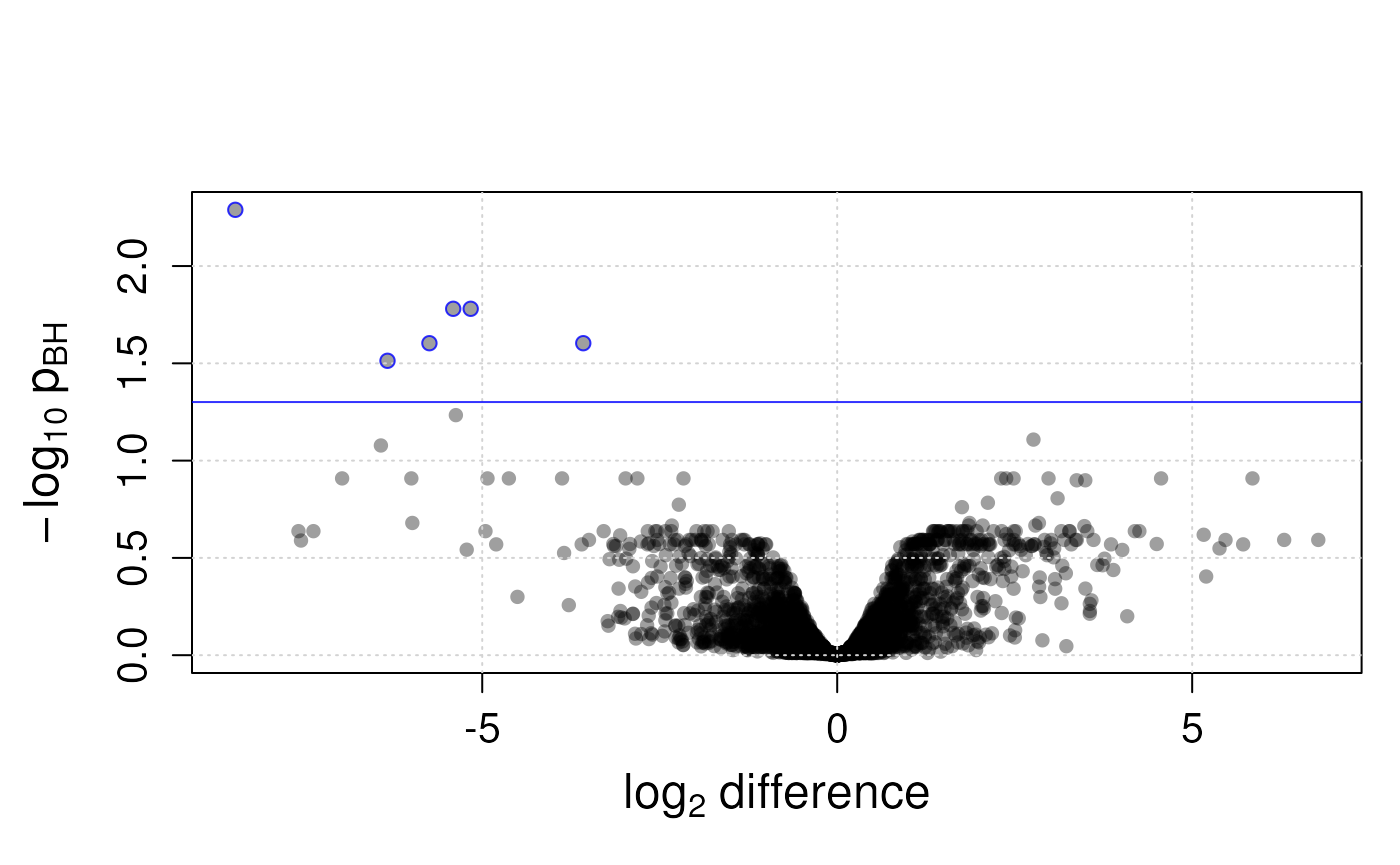

## (846 observations deleted due to missingness)